20 Software Engineers for Every 1 Chip Designer—AI Is Trying to Close the Gap

There are 20 software engineers for every hardware engineer in the US. AI tools are turning software talent into chip designers, but senior expertise still wins.


"OpenAI Syndrome" hits Microsoft, AMD, and Oracle: strong AI earnings, crashing stocks. MOUs fail to turn into contracts, sparking debt fears and overcapacity risks.

Discover how macOS Quality of Service (QoS) uses Efficiency cores to deliver 12+ hours of battery life. Learn why Apple Silicon outlasts Intel by design.

The C2PA effort to label AI images is failing. Platforms strip metadata, Apple won't join, and creators reject the labels. Instagram says to assume photos aren't real.

A security researcher found a remote code execution (RCE) flaw in AMD AutoUpdate affecting millions. AMD closed the report the same day as "out of scope" and refuses to fix the insecure HTTP download.

An ex-Google engineer convicted of stealing AI secrets faces up to 70 years in prison. The case mirrors Taiwan's TSMC case: AI theft is now treated as a national security threat, not just an IP dispute.

FPT reveals Vietnam's first fully Vietnamese-owned advanced semiconductor testing plant in Hanoi. With potential output of billions of units a year, can it rival Taiwan as global chip tensions rise?

RISC-V's customizable design enables innovation but makes verification nearly impossible. Custom extensions multiply into trillions of instruction combinations to test, and the ecosystem risks fragmentation.

LLMs choke on million-token contexts, but recursion changes that. Prime Intellect's RLM spawns sub-models to surgically dissect data.

China's Shenzhen EUV prototype is live, torching U.S. chip controls and splitting the $600B market into rival empires. Chip independence by 2028 would mean game over for a unified global market.

ASML cuts 1,700 jobs, mostly managers, amid a €13.2B surge in Q4 bookings driven by the AI boom. Why trim leadership just as chip demand explodes? Streamlining risks execution problems while TSMC and Samsung rush to expand.

Apple turns to Intel's 14A process for non-Pro iPhone chips starting in 2028, diversifying away from TSMC amid capacity strains. As AI demand surges, this supply shift exposes risks in the foundry race, while premium Pro silicon stays exclusive to TSMC.

The era of "Assembled in India" is ending. The era of "Owned by India" has begun. From Lava's UK launch to Tata's new fabs, we connect the dots on the 18-month roadmap to hardware sovereignty.

For a decade, the iPhone dictated TSMC's roadmap. That era is over. We analyze the "Revenue Density" math that explains why TSMC is pivoting $56B in capex toward NVIDIA, leaving smartphone giants to pay the bill.

Apple has secured 100% of TSMC's 2nm capacity, and the 2nm node marks the end of the 15-year FinFET era. We analyze why the transistor's shape had to change to Gate-All-Around (GAA).

A routine API outage at 4 AM exposed a critical flaw in my security research. I analyze why relying on "Real-Time Data" is a single point of failure and the mathematical fix for resilient pipelines.

ProLogium has unveiled a superfluidized all-inorganic solid-state lithium ceramic battery platform at CES and confirmed construction of its first gigafactory in France. The company aims to industrialize high-safety, fast-charging cells for next-gen EV and energy storage applications across Europe and beyond.

SpaceX's upcoming Falcon 9 launch of 36 SDA Tranche 2 Tracking Layer satellites is not just another mission. It is a bulk upload of hypersonic-tracking hardware into low Earth orbit (LEO) that rewires the economics of missile warning. This single flight accelerates the Pentagon's pivot from a few GEO behemoths to a proliferated, upgradeable sensor mesh built on commercial launch cadence.

XELA's 3D touch sensors just cracked robot dexterity, delivering a human-like grip at CES 2026. The $500B automation gold rush starts now, sidelining clunky bots for good.

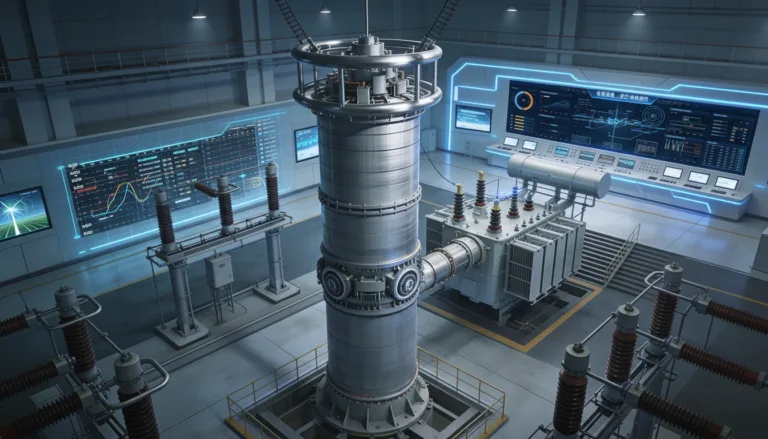

China's hybrid HVDC valve cuts grid losses by 50%, powering a $500B renewable revolution. The world's first unit is live today, and the West is playing catch-up.