“I think we often underestimate the role of interfaces. When we talk about AI performance, we rush to discuss tensor cores, fused multiply-add units, or TOPS. But none of that works if data can’t flow in and out fast enough. And as models keep growing, moving from millions to billions of parameters, memory access is becoming the real bottleneck.” - Abhinav Kumar

The Interface AI Doesn’t Talk About—But Uses Every Cycle

Every AI accelerator you’ve heard of—whether it’s running inside your smartphone, powering autonomous vehicles, or deployed across hyperscale data centers—relies on moving massive amounts of data. But compute isn’t the only hero here. Behind the scenes, it’s the interconnect that decides whether data reaches the processing units on time.

That’s where AXI comes in.

AI workloads are pushing silicon not just for raw performance but for bandwidth efficiency and power-aware data movement. And this is exactly where the Advanced eXtensible Interface—AXI—has become indispensable. It’s quietly becoming the standard for communication inside modern AI SoCs, connecting compute cores, DMA engines, memory controllers, and even chiplets.

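And the best part? Because data width, ID width, and the number of outstanding transactions can be chosen per interface, designers can tune each link instead of accepting one bus-wide compromise.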

What Exactly Is AXI—and Why Does It Matter?

AXI is part of the AMBA (Advanced Microcontroller Bus Architecture) family, introduced with AMBA 3 and refined in AMBA 4. But unlike traditional buses, AXI is not just a set of shared wires between blocks. It is a point-to-point, pipelined, high-performance interface protocol designed for modern SoCs.

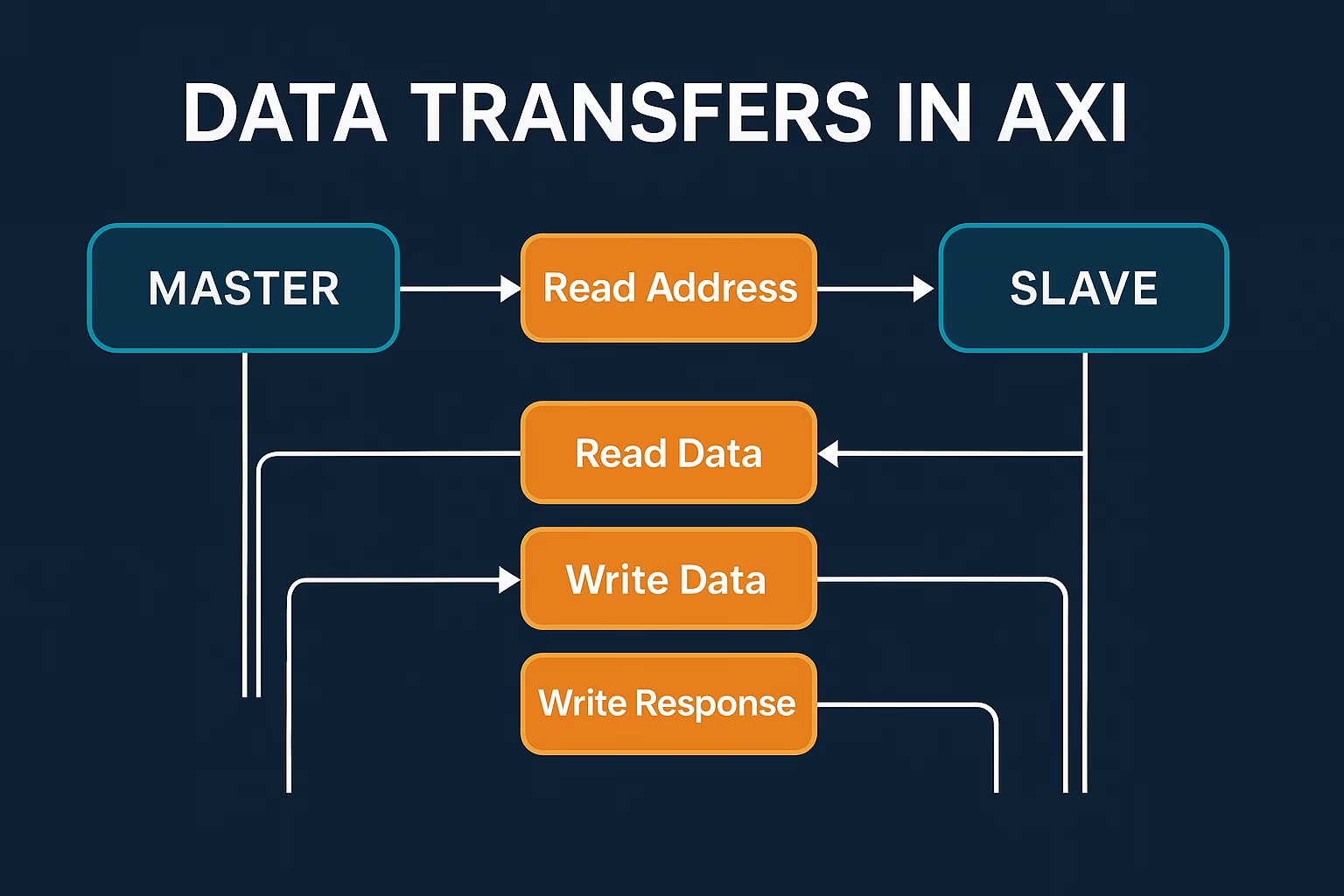

You can think of AXI as a system of independent channels, each doing a specific job:

- Read address

- Read data

- Write address

- Write data

- Write response

These separate read and write channels mean reads and writes don’t block each other, which is exactly what AI workloads need when multiple modules are accessing memory in parallel.
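To make the channel split concrete, here is a minimal Python sketch of a toy memory slave. It assumes a simplified cycle model of my own (one accepted read and one accepted write per cycle) and uses the spec's short channel names AR, R, AW, W, and B. It is an illustration of the idea, not a model of any real interconnect.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AxiChannels:
    """Five independent FIFOs, one per AXI channel."""
    ar: deque = field(default_factory=deque)  # read address
    r: deque = field(default_factory=deque)   # read data
    aw: deque = field(default_factory=deque)  # write address
    w: deque = field(default_factory=deque)   # write data
    b: deque = field(default_factory=deque)   # write response


def run_memory(ch, mem):
    """Toy slave: each cycle it serves at most one read AND at most one write."""
    cycles = 0
    while ch.ar or (ch.aw and ch.w):
        if ch.ar:  # read path: AR -> R
            addr = ch.ar.popleft()
            ch.r.append((addr, mem.get(addr, 0)))
        if ch.aw and ch.w:  # write path: AW + W -> B
            addr = ch.aw.popleft()
            mem[addr] = ch.w.popleft()
            ch.b.append("OKAY")
        cycles += 1
    return cycles


mem = {0x00: 11, 0x04: 22, 0x08: 33, 0x0C: 44}
ch = AxiChannels()
ch.ar.extend([0x00, 0x04, 0x08, 0x0C])   # four reads
ch.aw.extend([0x10, 0x14, 0x18, 0x1C])   # four writes
ch.w.extend([1, 2, 3, 4])
cycles = run_memory(ch, mem)
print(cycles)  # 4 cycles for 8 transfers, because reads and writes overlap
```

On a shared bus with one transfer per cycle, the same eight transfers would take eight cycles in this toy model.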

It also supports burst transfers, allowing large blocks of data—like tensors, weights, and feature maps—to be transferred quickly and efficiently.
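As a rough illustration of how a large tensor copy turns into bursts, the sketch below splits a transfer into AXI4 incrementing (INCR) bursts. It relies on two protocol rules: an AXI4 INCR burst carries at most 256 beats, and a burst must not cross a 4 KB address boundary. The 16-byte beat (a 128-bit data bus) and the function name `split_into_bursts` are assumptions for the example.

```python
def split_into_bursts(start, nbytes, beat_bytes=16, max_beats=256):
    """Split a transfer into (address, beats) pairs that obey AXI4 INCR rules.

    Assumes start and nbytes are multiples of beat_bytes (aligned transfers only).
    """
    if start % beat_bytes or nbytes % beat_bytes:
        raise ValueError("this sketch only handles beat-aligned transfers")
    bursts = []
    addr, remaining = start, nbytes
    while remaining > 0:
        to_boundary = 4096 - (addr % 4096)           # bytes left before the next 4 KB boundary
        chunk = min(remaining, to_boundary, max_beats * beat_bytes)
        bursts.append((addr, chunk // beat_bytes))   # the AxLEN field would carry beats - 1
        addr += chunk
        remaining -= chunk
    return bursts


# An 8 KB block of weights starting 256 bytes below a 4 KB boundary.
for addr, beats in split_into_bursts(0x0F00, 8192):
    print(hex(addr), beats)
```

Here the 8 KB copy becomes three bursts of 16, 256, and 240 beats. The short first burst exists only to reach the 4 KB boundary.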

And by decoupling the address, control, and data phases, AXI provides more flexible timing. This lets accelerators handle variable latencies and still keep the pipeline full—key for real-time AI inference.
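A back-of-the-envelope way to see why this matters: if a master can keep several requests in flight, memory latency is overlapped instead of paid once per burst. The sketch below is a simple Little's-law style estimate, not a cycle-accurate model. The function name and all the numbers (256-byte bursts, 100-cycle latency, 16 bytes per cycle of bus width) are assumptions chosen for illustration.

```python
def sustained_bytes_per_cycle(outstanding, burst_bytes, latency_cycles, bus_bytes_per_cycle):
    """Estimate sustained throughput when `outstanding` bursts can be in flight.

    Throughput is limited either by the bus width or by how much data
    can be in flight during one round-trip latency.
    """
    in_flight_limit = outstanding * burst_bytes / latency_cycles
    return min(bus_bytes_per_cycle, in_flight_limit)


for n in (1, 2, 4, 8):
    bw = sustained_bytes_per_cycle(n, burst_bytes=256, latency_cycles=100,
                                   bus_bytes_per_cycle=16)
    print(f"{n} outstanding -> {bw:.2f} B/cycle")
```

With these assumed numbers, a single outstanding burst uses only a fraction of the bus, while eight outstanding bursts are enough to saturate it.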

In short, AXI matters because efficient communication is compute.

Why AXI When AHB Was Already There?

Before AXI, we were using AHB, part of AMBA 2. It was fine for simpler SoCs with a CPU, a DMA controller, and maybe one or two peripherals. But AI workloads exposed its limitations.

AHB used a shared bus, so only one master could own it at a time. Reads and writes could not overlap, pipelining was limited to a single address phase ahead of the data phase, and a slow slave could stall the whole bus until it responded.

AXI fixed that with:

- Independent channels for address/data

- Parallel read/write paths

- Out-of-order support using transaction IDs

Modern SoCs need to keep many modules talking to memory at once—AXI allows that without the congestion. It doesn’t just improve speed—it scales with complexity.
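Here is a small sketch of how transaction IDs allow reordering. AXI lets responses with different IDs return in any order, while responses that share an ID must return in the order they were issued. The request names and cycle counts below are made up for the example, and `completion_order` is a hypothetical helper, not part of any tool.

```python
def completion_order(requests):
    """Return request names in the order their responses come back.

    requests: list of (txn_id, name, ready_cycle) in issue order, where
    ready_cycle is when the target could respond at the earliest.
    """
    last_done = {}  # per ID: cycle at which the previous response on that ID completed
    done = []
    for seq, (txn_id, name, ready) in enumerate(requests):
        # Same-ID responses must stay in issue order, so a fast response
        # waits behind an earlier, slower one that shares its ID.
        t = max(ready, last_done.get(txn_id, 0))
        last_done[txn_id] = t
        done.append((t, seq, name))
    return [name for _, _, name in sorted(done)]


requests = [
    (0, "weights_from_dram", 80),    # slow target, ID 0
    (1, "acts_from_sram", 10),       # fast target, ID 1
    (0, "weights_from_dram_2", 20),  # same ID 0: cannot overtake the first one
    (1, "acts_from_sram_2", 12),
]
print(completion_order(requests))
```

The two SRAM reads on ID 1 finish first even though a DRAM read was issued before them, which is the behavior a shared bus cannot offer.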

AXI vs AHB: What Changed?

| Feature | AHB (AMBA 2) | AXI (AMBA 3/4) |
|---|---|---|
| Architecture Type | Shared Bus | Decoupled Channels |
| Read/Write Parallelism | ❌ No | ✅ Yes |
| Out-of-Order Support | ❌ No | ✅ Yes |
| Burst Transfer Control | ✅ Basic | ✅ Advanced |
| Pipeline Depth | Limited | ✅ Deep |
| Multiple Master Support | Limited | ✅ Scalable |
| Ideal For | Simpler SoCs | AI, Graphics, Data-centric SoCs |

Why AI Accelerators Love AXI?

AI accelerators are built for high-throughput, low-latency workloads—and AXI fits perfectly.

Here’s why:

- Multiple masters (compute cores, DMA engines, I/O) can talk to memory at the same time without stalling.

- Out-of-order support ensures that slow responses from one block don’t block others.

- Burst and pipelined transfers help move large blocks of data like activations and weights efficiently.

- Memory-mapped design means every component knows exactly how to talk to memory and I/O, without protocol conversions.
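To show what "memory-mapped" means in practice, here is a minimal sketch of the address decode an interconnect performs for every request. The address map, region sizes, and target names are entirely hypothetical. `DECERR` is the response AXI defines for an address that maps to no target.

```python
# Hypothetical address map for an AI SoC: (base, size, target).
ADDRESS_MAP = [
    (0x0000_0000, 0x4000_0000, "dram"),
    (0x4000_0000, 0x0010_0000, "sram"),
    (0x5000_0000, 0x0000_1000, "npu_regs"),
]


def decode(addr):
    """Route an address to (target, offset), or signal a decode error."""
    for base, size, target in ADDRESS_MAP:
        if base <= addr < base + size:
            return target, addr - base
    return "DECERR", None  # no target claims this address


print(decode(0x4000_0040))  # an access that lands in on-chip SRAM
print(decode(0x5000_0008))  # an access to an accelerator register
print(decode(0x6000_0000))  # an unmapped address
```

Every master, whether a compute core or a DMA engine, issues plain addresses and lets this decode pick the destination.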

AXI is helping accelerators:

- Scale bandwidth as models grow

- Maintain performance without burning extra power

- Stay modular and easy to verify

It’s not just a good fit—it’s become the backbone.

AXI in Real-World AI Hardware

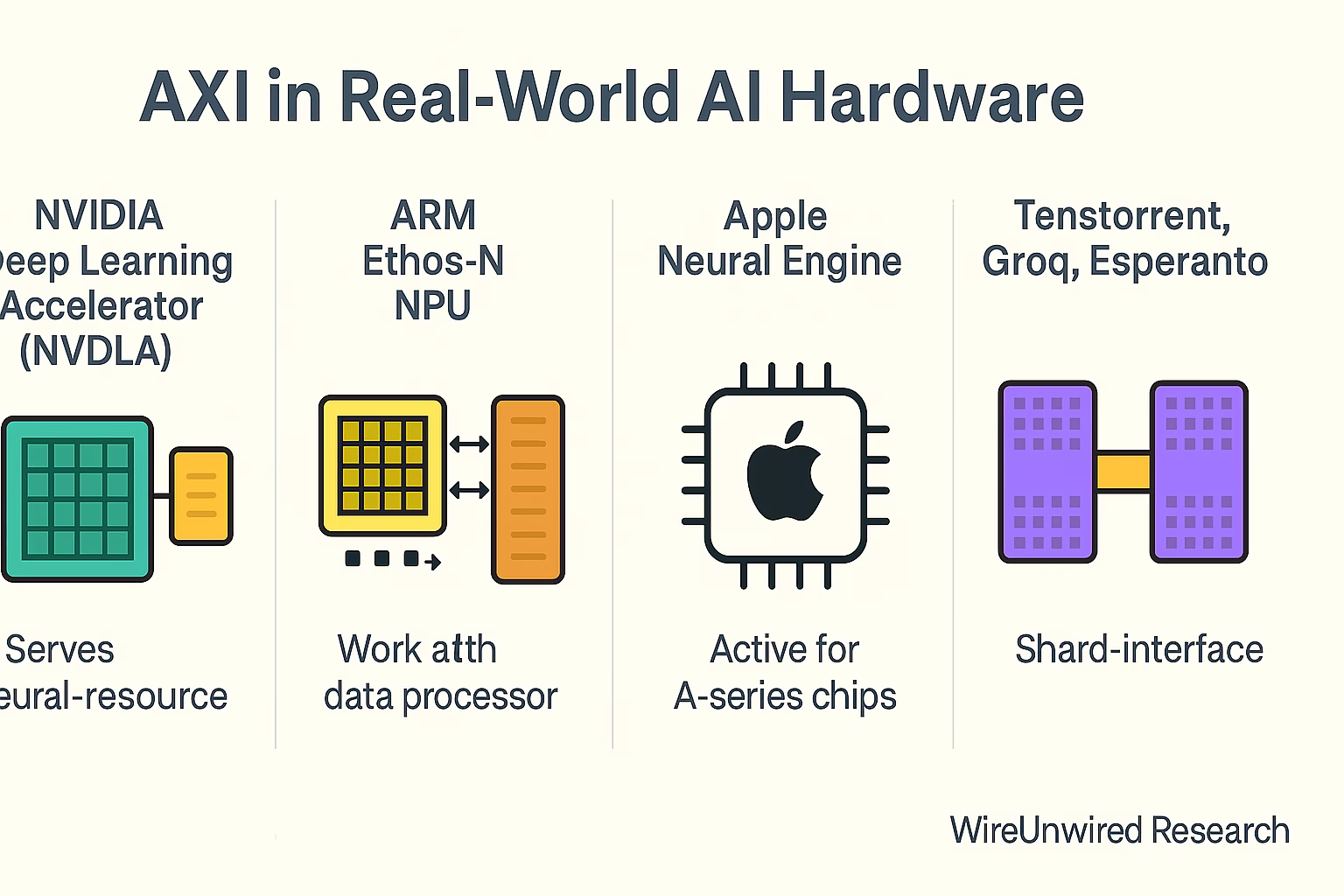

AXI isn’t just theory—it’s already inside most serious AI hardware:

- NVIDIA’s NVDLA: Uses an AXI-compatible data backbone interface (DBBIF) to move data at high speed between its compute engines and memory.

- Arm’s Ethos-N: Relies on AXI to handle command, data, and weight streams in its edge inference pipeline.

- Apple Neural Engine: Though details are closed, teardowns suggest AXI-style interconnects inside A and M-series SoCs.

- Tenstorrent, Esperanto, Groq: Rely on AXI or custom variants to handle memory access and chiplet communication.

- FPGAs (AMD/Xilinx, Intel): AMD/Xilinx tools use AXI as the default interconnect in AI prototyping and IP reuse flows, and Intel tools support it alongside their native Avalon interfaces.

Everywhere I look, AXI is already part of the design flow.

AXI4-Stream and AXI-Lite—Why Variants Matter

Different parts of an AI SoC have different needs. AXI handles this through its variants.

🔹 AXI4-Stream:

For raw data pipelines—like feature maps or video streams—AXI4-Stream skips addresses and responses. It’s just:

- Data in → Data out

It is used heavily in convolution pipelines, DMA streaming paths, and inference stages. It’s simple, fast, and clean.
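The "data in, data out" flow still needs flow control. AXI4-Stream handles it with a TVALID/TREADY handshake, plus TLAST to mark the end of a packet. Below is a minimal sketch of that handshake, assuming a source that always has data and a consumer whose readiness follows a repeating pattern. The function name and the pattern are illustrative assumptions.

```python
def stream_transfer(samples, ready_pattern):
    """Move samples across a stream link; a beat transfers only when
    TVALID and TREADY are both high in the same cycle.

    Returns (received beats as (data, tlast), cycles used).
    """
    if not any(ready_pattern):
        raise ValueError("consumer is never ready; the stream would stall forever")
    received, cycle, i = [], 0, 0
    while i < len(samples):
        tvalid = True                                   # source always has data in this sketch
        tready = ready_pattern[cycle % len(ready_pattern)]
        if tvalid and tready:
            tlast = (i == len(samples) - 1)             # flag the final beat of the packet
            received.append((samples[i], tlast))
            i += 1
        cycle += 1                                      # a stalled cycle still costs time
    return received, cycle


# The consumer applies backpressure every third cycle.
beats, cycles = stream_transfer([10, 20, 30, 40], [True, True, False])
print(beats, cycles)
```

Four beats take five cycles here because one cycle is lost to backpressure, and no address or response traffic is involved at any point.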

🔹 AXI-Lite:

For lightweight control—like writing registers or sending start/stop signals—AXI-Lite reduces overhead and complexity.
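As a sketch of what AXI-Lite style control looks like from software, the toy register block below accepts single 32-bit reads and writes, which matches AXI4-Lite's restriction to one data beat per transaction. The register names, offsets, and the "start bit sets busy" behavior are hypothetical. `OKAY` and `SLVERR` are standard AXI response codes.

```python
class AxiLiteRegs:
    """Toy control/status register block for an accelerator (hypothetical layout)."""
    CTRL, STATUS, SRC_ADDR = 0x00, 0x04, 0x08

    def __init__(self):
        self.regs = {self.CTRL: 0, self.STATUS: 0, self.SRC_ADDR: 0}

    def write(self, addr, data):
        """Single-beat write; returns the write response."""
        if addr not in self.regs:
            return "SLVERR"
        self.regs[addr] = data & 0xFFFF_FFFF   # registers are 32 bits wide
        if addr == self.CTRL and data & 1:     # bit 0 of CTRL acts as a start bit
            self.regs[self.STATUS] = 1         # mark the engine busy
        return "OKAY"

    def read(self, addr):
        """Single-beat read; returns (data, response)."""
        if addr not in self.regs:
            return 0, "SLVERR"
        return self.regs[addr], "OKAY"


npu = AxiLiteRegs()
npu.write(AxiLiteRegs.SRC_ADDR, 0x8000_0000)   # point the engine at its input buffer
npu.write(AxiLiteRegs.CTRL, 1)                 # kick off a run
print(npu.read(AxiLiteRegs.STATUS))
```

A handful of single-word accesses like these is all the control path needs, which is why it does not justify the cost of a full AXI4 port.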

Together, these variants let designers:

- Use AXI4 for memory

- Use AXI4-Stream for data

- Use AXI-Lite for config

…all in one coherent architecture.

What’s Next for AXI in AI Hardware?

AI chips are moving toward chiplets, 3D stacking, and modular design. But even as the physical layout changes, AXI is staying relevant.

I’m seeing AXI being used:

- Between chiplets for internal memory and control

- Inside NoCs (Network-on-Chip) to support predictable routing

- Even across 3D-stacked silicon, using AXI-style packetized links

It’s not just surviving—it’s adapting.

AXI isn’t just a protocol—it’s part of the design language of modern AI silicon. And as compute keeps scaling, I believe AXI will keep powering what comes next.
