I still remember the first time I saw how computers add two numbers — and how painfully slow the process can be if we don’t think smart.

Let’s say you’re adding two 4-bit numbers:

A = 1 0 1 1

B = +0 1 1 0

We begin from the rightmost bit (Bit0). It’s simple: you add A0 and B0, maybe get a carry. Then you move to Bit1. But wait — Bit1 can’t do anything until it knows if Bit0 generated a carry.

Then Bit2 waits on Bit1.

Bit3 waits on Bit2.

And it goes on…

In this setup, each bit waits for the result of the previous one — like kids in a lunch line waiting for the first tray to move.

This is the ripple-carry adder, and it’s how addition has traditionally worked in hardware. The delay stacks up. If you’re building a 64-bit CPU, it means your last bit must wait through 64 steps before it can compute. That’s O(n) delay — and in digital logic, that’s a real bottleneck.

We’ve built rockets and robots, but we’re still letting bits wait in line?

That’s where the carry lookahead adder comes in — it says: “What if we stop waiting altogether?”

What Carry Lookahead Solves ?

If ripple-carry is a line of kids waiting for lunch, then carry lookahead is the teacher who says — “I already know who’s going to pass the tray, so let’s just get the food to everyone now.”

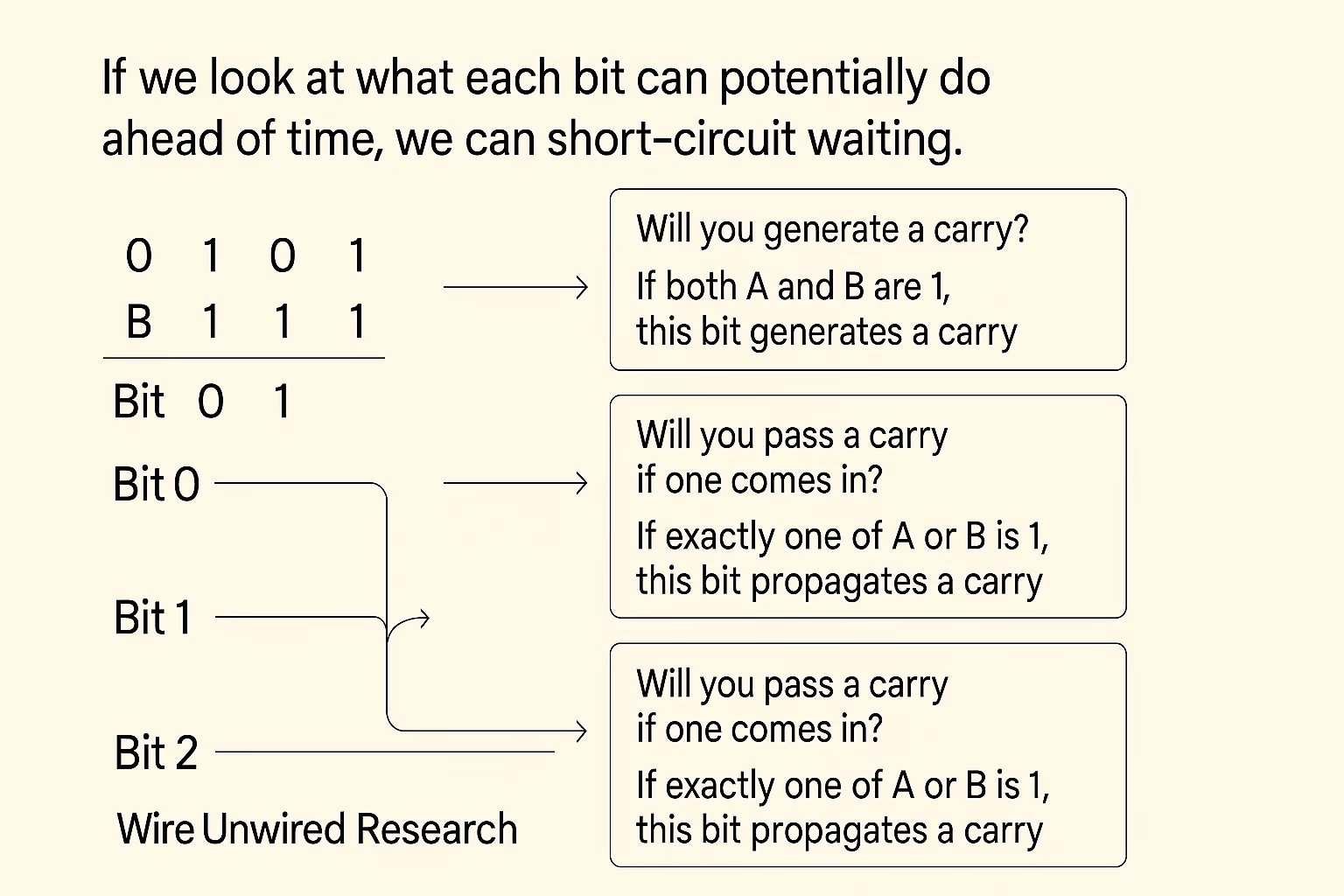

In ripple-carry, each bit needs to wait for the carry-in from the bit before it. But if we look at what each bit can *potentially* do ahead of time, we can short-circuit this waiting.

Here’s the trick:

We ask two simple questions at each bit:

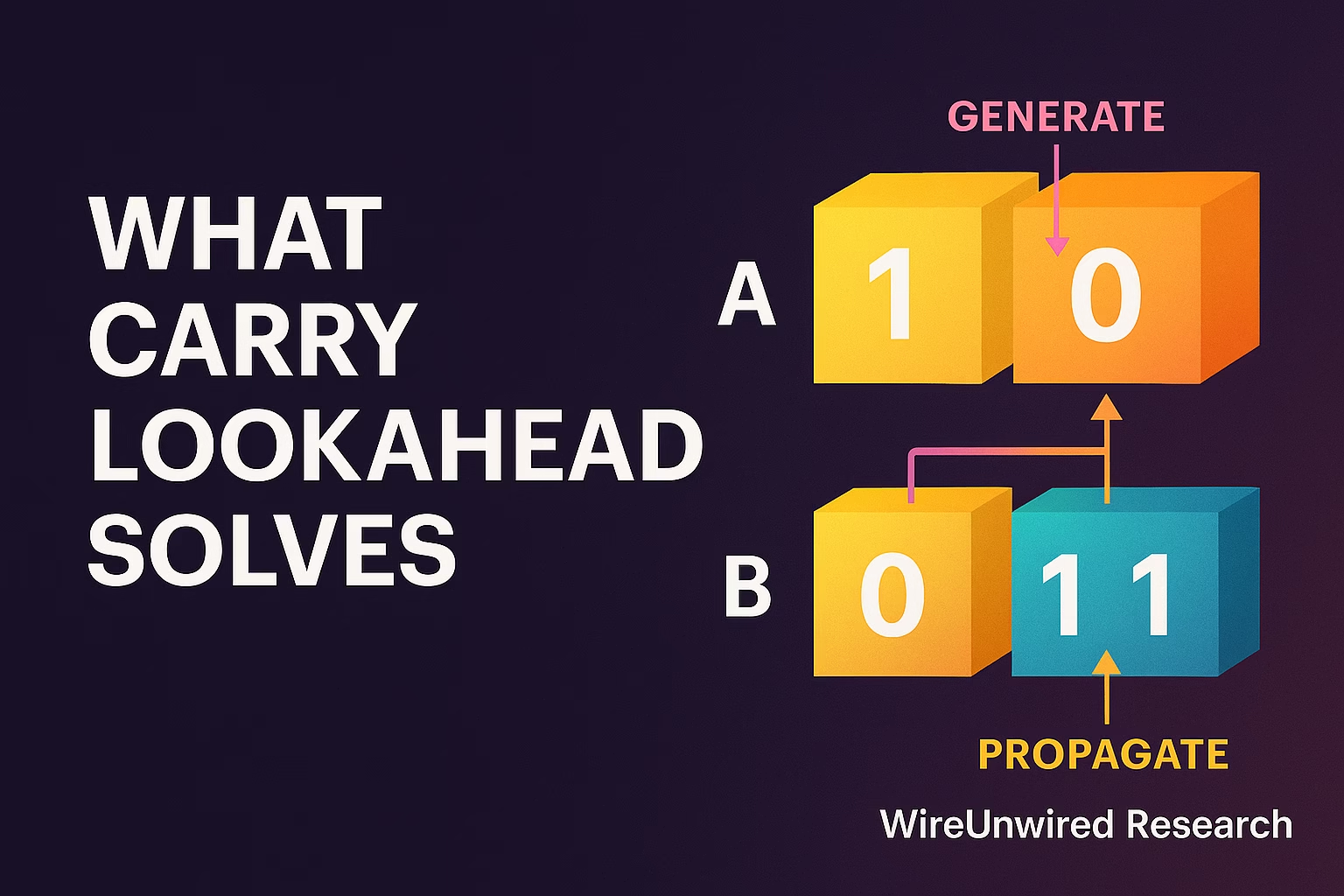

1. Will you generate a carry?

→ If both A and B are 1, a carry is guaranteed. We call this a Generate (G).

2. Will you pass a carry if one comes in?

→ If exactly one of A or B is 1, this bit is willing to pass it on. This is called a Propagate (P).

That’s all. Every bit answers just these two things:

1)Do I make a carry?

2)Do I pass a carry?

From that, we can predict exactly which bits will get a carry without waiting for the chain reaction.

It’s like giving every kid in the line a walkie-talkie. Instead of waiting for the person in front to finish, you already know:

1) Who’s passing the message

2) Where the message is going

3) And who doesn’t need to wait at all

And in digital logic, that’s gold.

The General Carry Lookahead Approach (Before Trees)

Once we know that each bit can either generate or propagate a carry, we try to outsmart the ripple delay by calculating all the carries ahead of time — just from the inputs.

Let’s take a 4-bit example to keep things simple. We define:

G[i] = A[i] & B[i] → this bit generates a carry

P[i] = A[i] ^ B[i] → this bit propagates a carry

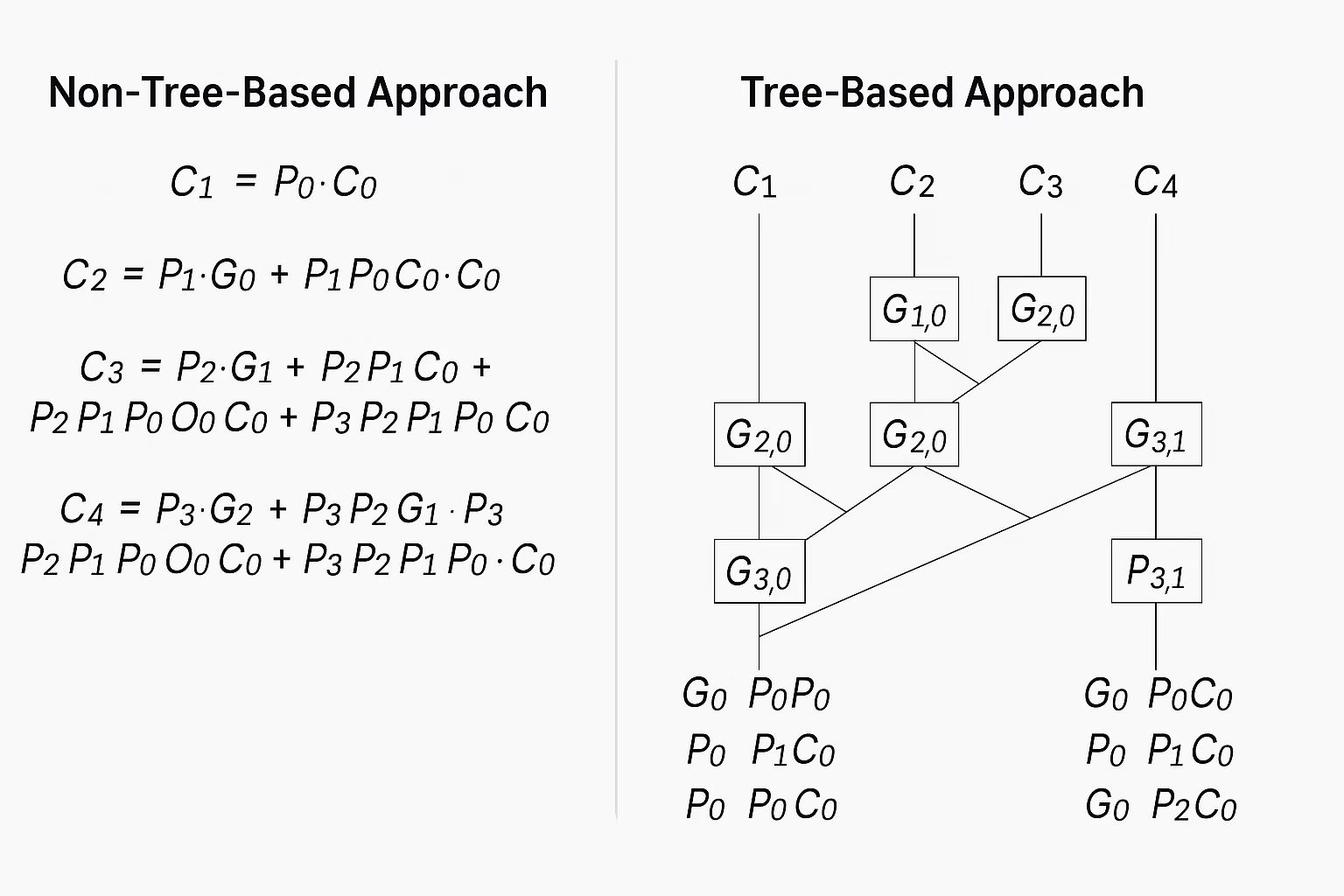

So now, we try to compute each carry input (C1, C2, C3, C4) without waiting. We do that by expanding the logic forward:

C1 = G0 + P0·C0

C2 = G1 + P1·G0 + P1·P0·C0

C3 = G2 + P2·G1 + P2·P1·G0 + P2·P1·P0·C0

C4 = G3 + P3·G2 + P3·P2·G1 + P3·P2·P1·G0 + P3·P2·P1·P0·C0

It looks clever — and it is. We’re not waiting on intermediate carries anymore. We’re predicting what the carries will be by using only the initial inputs and C0.

This approach cuts through the ripple delay and brings down the addition time from O(n) to something closer to O(log n) in theory. But there’s a problem.

Every new carry involves more terms:

1)C1 uses 2 signals

2)C2 uses 4 signals

3)C3 uses 8 signals

4)C4 already has 16 terms if you expand everything

And in hardware, more terms = more gates = more delay.

Even though it’s not a ripple, we’ve just created a monster logic equation that eats up area and time.

For small adders like 4-bit or 8-bit, this works fine. But in a modern CPU, you’re dealing with 64-bit or 128-bit additions. Try doing the above for 64 bits — and you’ll quickly hit a wall.

That’s where we need a smarter trick — not just lookahead, but structured lookahead, using grouping and reuse.

And that brings us to the tree.

Grouping Like a Genius — Building Blocks of G/P

The general carry lookahead logic showed us a way out of the ripple mess — but we ran into a new problem: growing complexity.

Each carry like C4 ends up with a huge expression. More gates, more delay, and very soon, your so-called “lookahead” becomes just another bottleneck.

So we try something smarter. We group the generate (G) and propagate (P) signals — not just to save space, but to build reusable logic blocks.

Let’s take a step-by-step example with 4 bits.

👷 Step 1: Define per-bit G and P

These are the starting points:

G0, P0 — for Bit0

G1, P1 — for Bit1

G2, P2 — for Bit2

G3, P3 — for Bit3

Step 2: Group G and P across 2 bits

We now define grouped G and P, like this:

G1_0 = G1 + P1·G0

P1_0 = P1·P0

What does this mean?

G1_0: the group from Bit0 to Bit1 can generate a carry if either:

- Bit1 generates one directly, or

- Bit1 passes a carry from Bit0, which generated one

P1_0: this group can propagate a carry if both Bit1 and Bit0 are willing to pass it along

Same way, we group:

- G2_1 = G2 + P2·G1

- P2_1 = P2·P1

- G3_2 = G3 + P3·G2

- P3_2 = P3·P2

Now we’ve covered 1-bit distance groupings. What next?

🌉 Step 3: Group across 2-bit blocks

Let’s now reuse what we built:

- G2_0 = G2_1 + P2_1·G0

- P2_0 = P2_1·P0

- G3_1 = G3_2 + P3_2·G1_0

- P3_1 = P3_2·P1_0

At this point, G2_0 tells us:

“Can bits 0–2 together generate a carry?”

And G3_1 tells us:

“Can bits 1–3 together generate a carry?”

Also Read :What Is AMBA? A Simple Guide to Advanced Microcontroller Bus Architecture for SoC Designers

🧠 Why Grouping Works

Instead of repeating huge expressions like:

C4 = G3 + P3·G2 + P3·P2·G1 + P3·P2·P1·G0 + P3·P2·P1·P0·C0

We write:

C4 = G3_1 + P3_1·C0

All the complexity is already handled by the groupings.

Each level of grouped G/P builds on the previous.

No repetition. No explosion of terms.

✅ The logic becomes modular

✅ Easy to scale

✅ Ready for tree-based carry computation

This is the real genius behind carry lookahead — it’s not just that we predict carries.

It’s that we do it without duplicating effort.

The Carry Tree — All Carries in log₂(n) Time

At this point, we’ve built the idea of grouping — smaller pairs of bits forming larger blocks that help us figure out where the carry goes. But there’s still one problem left: time.

Even with grouping, if we compute G3_1 and P3_1 after computing G1_0, P1_0, G3_2, etc., we still follow a sequence. The logic is smarter, but it’s still stacked one after the other.

That’s where the tree comes in — and it completely changes the game.

🌲 Why a Tree?

Imagine you’re adding 4 bits:

A = a3 a2 a1 a0

B = b3 b2 b1 b0

To get C1, C2, C3, C4, you could either:

- Wait for each carry to be ready (ripple style)

- Or compute everything in parallel, using a tree of grouped G/P logic

The tree-based structure works like this:

- Stage 0: Every bit computes its own

G[i]andP[i] - Stage 1: Combine every pair (1-bit distance) →

G1_0,G2_1,G3_2 - Stage 2: Combine across 2-bit distance →

G2_0,G3_1

In each stage, the distance we can “see” doubles.

| Stage | Distance | Who Looks Back How Far |

|---|---|---|

| 0 | 0 | G0, G1, G2, G3 |

| 1 | 1-bit | G1_0, G2_1, G3_2 |

| 2 | 2-bit | G2_0, G3_1 |

So in just 2 stages, we’ve computed everything we need to get:

C1 = G0 + P0·C0

C2 = G1_0 + P1_0·C0

C3 = G2_0 + P2_0·C0

C4 = G3_1 + P3_1·C0

Each carry is now available at the same time — because all the logic is happening in parallel across the tree.

⚡ What We Gained

Let’s compare delays:

| Adder Type | Time Complexity | How Carries Are Computed |

|---|---|---|

| Ripple Carry | O(n) | One by one, waiting for previous carry |

| General CLA | O(log n) (wider) | Computed ahead, but expressions grow |

| Tree CLA (Kogge-Stone) | O(log n) (balanced) | Computed in parallel using grouped logic |

In the tree, we do a few levels of reused grouped G/P logic — each stage handling double the distance. That’s why a 64-bit CLA can finish in just 6 stages (log₂64 = 6). For 128-bit? Only 7.

✅ We broke the waiting chain

✅ We controlled complexity

✅ And we unlocked the real power of parallelism

Why It Matters in Modern Chips

We’re not building these adders just to admire them — they sit at the heart of every modern processor. Whether it’s a smartphone SoC, a cloud data center CPU, or an AI accelerator chip, addition is everywhere — addresses, arithmetic, counters, indexing.

And the faster we can do addition, the faster everything else moves.

🏎️ When Every Nanosecond Counts

In hardware, time is not just money — it’s power, performance, and heat. Every extra gate a signal passes through adds delay and energy cost. Ripple-carry adds delay linearly with bit-width. General carry lookahead improves that — but tree-based CLA is where we get true performance.

Let’s say your processor has a 64-bit ALU. Without carry lookahead, you’d need 64 steps to compute a single sum. With a Kogge-Stone tree, you get all the carries in just 6 logic stages.

That’s not just speed — it’s predictability. It enables deep pipelining, consistent timing, and efficient instruction scheduling.

⚙️ Where You’ll Find It

Tree-based carry logic like Kogge-Stone or Brent-Kung is used in:

- High-performance CPU cores (Intel, AMD, Apple)

- DSP blocks in FPGAs

- Cryptographic accelerators

- Vector ALUs in AI chips

Even if the full tree isn’t used, variants like sparse trees or hybrid adders still borrow the concept of grouped and parallel carry generation.

📏 Trade-offs Still Exist

Tree-based CLAs are fast, but they take up more silicon. More wires, more logic blocks. That’s why in some low-power or resource-constrained designs, engineers still choose ripple or simpler lookahead methods.

But when performance is critical — when you’re pushing GHz boundaries — the carry tree is one of the most optimized, elegant paths to speed.

Wrap-Up — Carry Without the Wait

For something as simple as addition, it’s incredible how deep the engineering goes. From ripple-carry to full-fledged tree-based structures, the goal remains the same: don’t make the bits wait.

What started as a problem of sequential delay turned into a beautifully layered solution — first by understanding generate and propagate, then by grouping, and finally by building a logic tree where all paths converge in log₂(n) time.

It’s more than just faster math. It’s a mindset:

Don’t repeat. Don’t wait. Reuse and scale.

These principles aren’t limited to adders — they shape how we design hardware, optimize logic, and build faster, better chips every day.

And it all begins with the humble carry.

Discover more from WireUnwired Research

Subscribe to get the latest posts sent to your email.

You neglect to mention Manchester series prefix. A vs B prefix some switches, then Carry Propagates through or gets replaced by Annihilate or Generate. In either case, without any switch waiting to know what carry might do. 4way MUX such as 74CBT3253 has an internal decoder that applies all necessary prefixes to 5 ohm switches.