
WireUnwired Research • Key Insights

- Breaking News: A live data blackout exposes how fragile many fintech research workflows still are when core feeds fail.

- Impact: Risk models, trading dashboards, and compliance checks can all misfire when research tools silently fall back to stale or missing data.

- The Numbers: Billions in assets rely on real‑time fintech APIs every trading day, amplifying the systemic risk of even brief research errors.

A simple message such as “Could not retrieve live data at this time” is more than a minor glitch in a fintech terminal. It is a red flag that the data supply chain behind trading, lending, and risk analytics has hit a hard stop. In a world where milliseconds shape market moves, a research error on a fintech platform can cascade into mispricing, bad risk calls, and regulatory blind spots.

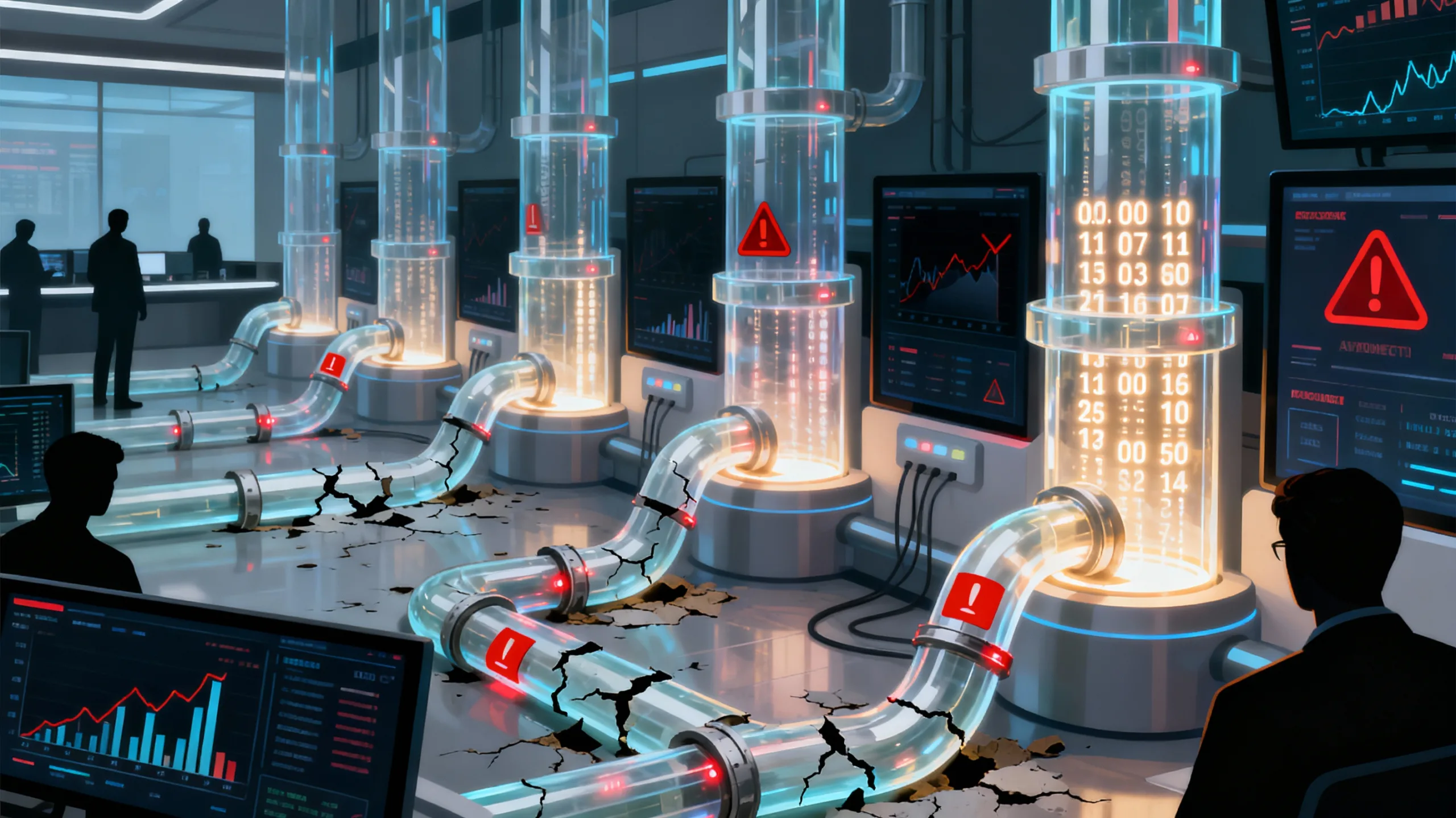

Most fintech platforms sit on a mesh of upstream data sources: exchanges, payment processors, banking APIs, credit bureaus, alternative data vendors. When any of these fail, research tools often degrade quietly rather than loudly, swapping live feeds for cached snapshots or partial datasets without clear disclosure to the end‑user. That opacity turns a technical fault into an information‑integrity problem.

How fintech data pipelines break

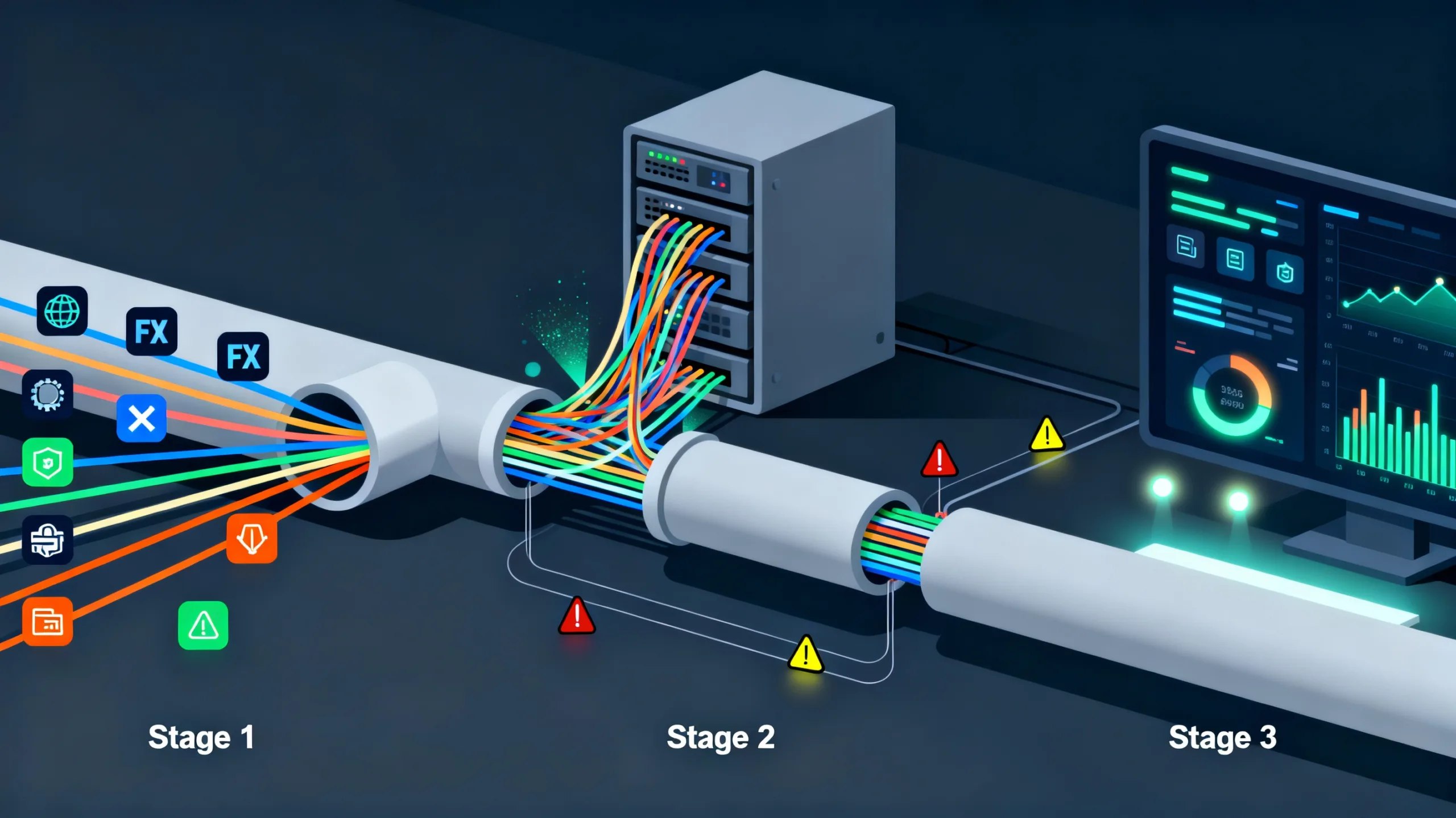

Under the hood, a modern fintech data stack is a sequence of fragile dependencies. Ingestion services pull in tick data, transaction streams, FX rates, on‑chain metrics, or credit events. Normalization layers then clean, deduplicate, and reconcile those feeds into a common schema. Analytics engines and dashboards finally sit on top, assuming that every number beneath them is fresh and trustworthy.

Research errors surface when any stage of that pipeline fails. An upstream exchange API rate‑limits a feed. A banking partner changes an authentication flow. A data vendor pushes malformed records. Or the analytics layer itself hits latency thresholds and times out. The result at the user interface is deceptively simple: a blank chart, a missing series, or a generic error that hides a complex failure chain.
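One way to keep that failure chain visible is to tag each error with the pipeline stage that raised it. The UI can then say *which* step failed instead of showing a generic message. The sketch below is illustrative only. It assumes a hypothetical three-stage pipeline (`ingest`, `normalize`, `analyze`), and `PipelineError` and `run_pipeline` are made-up names, not any particular platform's API.

```python
from typing import Any, Callable


class PipelineError(Exception):
    """Hypothetical error type that records which pipeline stage failed."""

    def __init__(self, stage: str, cause: Exception) -> None:
        super().__init__(f"{stage} stage failed: {cause!r}")
        self.stage = stage
        self.cause = cause


def run_pipeline(stages: list[tuple[str, Callable[[Any], Any]]], payload: Any) -> Any:
    """Run stages in order; wrap any failure with the name of the stage that raised it."""
    for name, fn in stages:
        try:
            payload = fn(payload)
        except Exception as exc:
            # Keep the original exception as __cause__ for logs and post-mortems.
            raise PipelineError(stage=name, cause=exc) from exc
    return payload


def ingest(_: Any) -> Any:
    # Simulated upstream problem, e.g. an exchange API rate-limiting the feed.
    raise TimeoutError("exchange feed rate-limited")


try:
    run_pipeline([("ingest", ingest), ("normalize", lambda x: x), ("analyze", lambda x: x)], None)
except PipelineError as err:
    print(err.stage)  # ingest
```

The UI can map `err.stage` to a specific banner, such as "price ingestion unavailable", rather than a blank chart.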

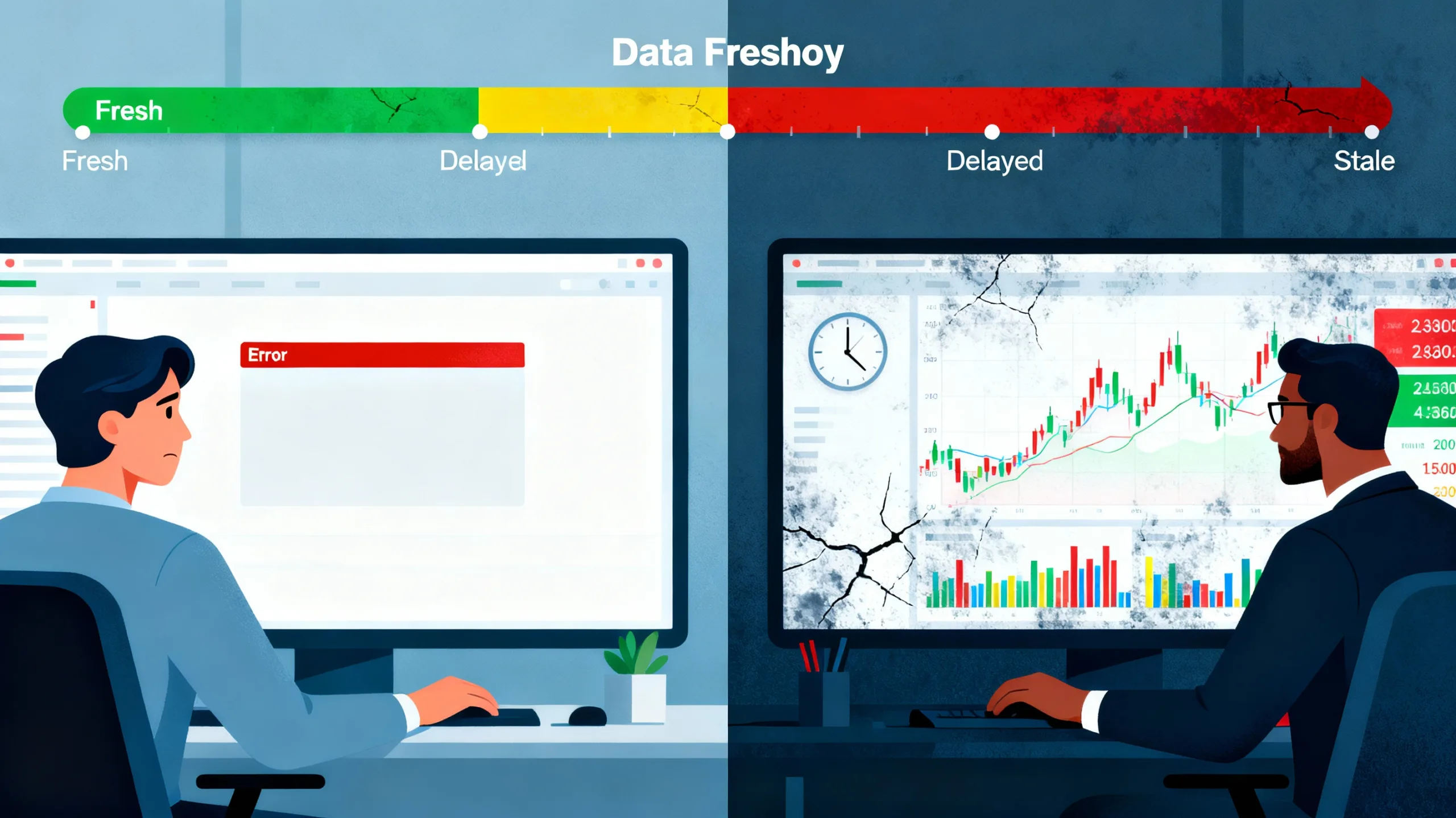

Stale data is worse than no data

No data with a clear error banner is inconvenient. Stale data masquerading as live is dangerous. When a research platform cannot retrieve live data but continues to display old figures without explicit timestamping, every chart and metric becomes a potential liability. Trading decisions, credit approvals, and treasury actions may be taken on conditions that no longer exist.

This is why robust fintech tooling treats data timeliness as a first‑class concept. Every data point needs an explicit freshness contract: how frequently it should update, what happens if it does not, and how the UI signals that gap to the analyst. Without that discipline, even short outages can poison backtests, distort machine‑learning models, and skew risk reports long after the underlying issue is fixed.
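A freshness contract can be as small as a per-feed maximum age plus a status label that the UI must display. The following is a minimal sketch under assumed names (`FreshnessContract`, `max_age`, and the `LIVE`/`STALE`/`MISSING` labels). The 5-second threshold is illustrative; real thresholds depend on the feed and the use case.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum
from typing import Optional


class Freshness(Enum):
    LIVE = "live"
    STALE = "stale"
    MISSING = "missing"


@dataclass(frozen=True)
class FreshnessContract:
    """Hypothetical per-feed contract: how old a value may be and still count as live."""
    feed: str
    max_age: timedelta

    def classify(self, last_update: Optional[datetime], now: Optional[datetime] = None) -> Freshness:
        # No timestamp at all means we cannot vouch for the value.
        if last_update is None:
            return Freshness.MISSING
        now = now or datetime.now(timezone.utc)
        return Freshness.LIVE if now - last_update <= self.max_age else Freshness.STALE


# Example: an FX feed expected to update at least every 5 seconds (illustrative threshold).
fx = FreshnessContract(feed="fx_rates", max_age=timedelta(seconds=5))
t0 = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
print(fx.classify(t0, now=t0 + timedelta(seconds=3)))  # Freshness.LIVE
print(fx.classify(t0, now=t0 + timedelta(minutes=2)))  # Freshness.STALE
print(fx.classify(None))                               # Freshness.MISSING
```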

Resilience patterns for live research

Engineering teams have a well‑tested playbook for hardening fintech research systems against these failures. At the infrastructure level, multi‑region deployments and redundant network paths reduce the odds of a single‑point outage taking down data flows. Multiple independent data vendors for critical asset classes provide a failover option when one provider degrades or changes its format unexpectedly.
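At its simplest, multi-vendor failover means trying providers in priority order and recording which one actually served the data. Downstream views can then disclose the switch. In this sketch the vendor functions (`primary`, `secondary`) and the quote value are hypothetical stand-ins. A real setup would wrap each vendor's own client library.

```python
from typing import Callable, Sequence


class AllVendorsFailed(Exception):
    """Raised when every configured vendor fails; keeps each vendor's error."""

    def __init__(self, errors: dict[str, Exception]) -> None:
        super().__init__(f"all vendors failed: {sorted(errors)}")
        self.errors = errors


def fetch_with_failover(
    symbol: str, vendors: Sequence[tuple[str, Callable[[str], float]]]
) -> tuple[str, float]:
    """Try vendors in priority order; return (vendor_name, value) from the first success."""
    errors: dict[str, Exception] = {}
    for name, fetch in vendors:
        try:
            return name, fetch(symbol)
        except Exception as exc:
            errors[name] = exc  # remember why this vendor was skipped
    raise AllVendorsFailed(errors)


def primary(symbol: str) -> float:
    raise ConnectionError("primary vendor down")  # simulated outage


def secondary(symbol: str) -> float:
    return 1.0842  # placeholder quote for illustration only


source, quote = fetch_with_failover("EURUSD", [("primary", primary), ("secondary", secondary)])
print(source, quote)  # secondary 1.0842
```

Returning the vendor name alongside the value keeps the failover visible. Vendors can differ in methodology and timing, so the view should say which source it is showing.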

At the application layer, circuit breakers and backoff strategies prevent a failing upstream from cascading congestion throughout the platform. Transparent fallbacks are crucial: instead of silently switching to cached data, systems should clearly mark that the view is historical, limited, or simulated. Automated health checks and synthetic probes can continuously test research endpoints and alert teams before users feel the impact.
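A circuit breaker with exponential backoff fits in a few dozen lines. After a run of consecutive failures, it stops calling the upstream for a cool-down period that doubles on each repeated trip. During that window, callers get an explicit "open" signal instead of adding more load. This is a hypothetical sketch: the `CircuitBreaker` class and its default thresholds are assumptions. Production-grade implementations typically add jitter, limits on half-open trial calls, and metrics.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpen(Exception):
    """Raised instead of calling the upstream while the breaker is open."""


class CircuitBreaker:
    """Minimal circuit breaker whose cool-down doubles on each consecutive trip."""

    def __init__(self, failure_threshold: int = 3, base_cooldown: float = 1.0,
                 max_cooldown: float = 60.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.base_cooldown = base_cooldown
        self.max_cooldown = max_cooldown
        self.clock = clock
        self.failures = 0  # consecutive failures since the last success
        self.trips = 0     # consecutive times the breaker has opened
        self.open_until: Optional[float] = None

    def call(self, fn: Callable[[], Any]) -> Any:
        if self.open_until is not None and self.clock() < self.open_until:
            raise CircuitOpen("upstream temporarily disabled")
        try:
            result = fn()  # breaker closed, or cool-down elapsed: allow a trial call
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold or self.open_until is not None:
                # Trip, or re-trip after a failed trial call, with a doubling cool-down.
                cooldown = min(self.base_cooldown * 2 ** self.trips, self.max_cooldown)
                self.trips += 1
                self.open_until = self.clock() + cooldown
            raise
        # Any success closes the breaker and resets the backoff.
        self.failures = 0
        self.trips = 0
        self.open_until = None
        return result
```

Giving each upstream feed its own breaker keeps one degraded vendor from tying up resources that healthy feeds need. `CircuitOpen` is also a natural trigger for a clearly labeled fallback view.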

Risk, compliance, and model drift

Fintech firms increasingly run high‑stakes workloads directly on top of research data: real‑time risk scoring, fraud detection, margin management, and automated portfolio rebalancing. When live data disappears, these systems either halt or, worse, extrapolate from a world that stopped updating a few minutes or hours ago. That is a textbook recipe for model drift and hidden exposure.
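For automated consumers, a safer default is to fail closed: refuse to act on inputs older than an agreed limit rather than extrapolate from them. This minimal guard is illustrative. It assumes a hypothetical per-workload `max_input_age` policy and a `rebalance` step that receives timestamped prices. The 30-second limit is an arbitrary example.

```python
from datetime import datetime, timedelta, timezone


class StaleInputError(RuntimeError):
    """Raised when an automated decision would rely on inputs older than policy allows."""


def require_fresh(inputs: dict[str, datetime], max_input_age: timedelta, now: datetime) -> None:
    """Fail closed: raise if any input's last update is older than max_input_age."""
    stale = {name: now - ts for name, ts in inputs.items() if now - ts > max_input_age}
    if stale:
        oldest = max(stale, key=stale.get)
        raise StaleInputError(f"{len(stale)} stale input(s); oldest is {oldest} ({stale[oldest]} old)")


def rebalance(prices: dict[str, tuple[float, datetime]], now: datetime) -> str:
    # Hypothetical rebalancing step: check freshness before any decision logic runs.
    require_fresh({sym: ts for sym, (_, ts) in prices.items()}, timedelta(seconds=30), now)
    return "rebalance submitted"  # placeholder for the real decision logic
```

A halted job with a clear `StaleInputError` is easier to explain to risk and compliance teams than a rebalance computed from prices that stopped moving.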

Regulators are also sharpening their focus on data governance across digital finance. Firms are expected to know not just what their models output, but which data feeds drove those decisions and how resilient those pipelines are. Documented incident playbooks, immutable audit trails, and clear client communications are no longer nice‑to‑have. They are becoming baseline expectations as supervisory pressure on algorithmic decision‑making grows.
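A common way to make an audit trail tamper-evident is to hash-chain its entries, so that editing any past record breaks the hashes after it. The sketch below uses only Python's standard `hashlib` and `json` modules. The record fields (`feed`, `event`, `to`) are illustrative. Hash chaining only detects tampering; it does not make a log immutable. Durable, access-controlled storage is still needed.

```python
import hashlib
import json
from typing import Any


class AuditLog:
    """Append-only, hash-chained log: each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    @staticmethod
    def _digest(prev_hash: str, record: dict[str, Any]) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes the same way.
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict[str, Any]) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        h = self._digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry makes this return False."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"feed": "fx_rates", "event": "vendor_failover", "to": "secondary"})
log.append({"feed": "fx_rates", "event": "recovered"})
print(log.verify())  # True
log.entries[0]["record"]["to"] = "primary"  # simulate tampering with history
print(log.verify())  # False
```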

Designing for graceful failure

Good fintech UX anticipates failure rather than assuming perfect uptime. That means clear messaging when research data cannot be retrieved, with specific information on what is affected: tick‑by‑tick prices, order‑book depth, settlement data, or corporate actions. It also means offering analysts structured fallbacks, such as last‑known‑good snapshots or alternative valuation models, while keeping those options clearly differentiated from live feeds.
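To keep a last-known-good snapshot clearly separate from live data, never return a bare number: every value should carry its source and as-of time. Here is a sketch under assumed names: `Quote`, `get_quote`, an in-memory `last_good` cache, and the banner wording are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Literal


@dataclass(frozen=True)
class Quote:
    value: float
    as_of: datetime
    source: Literal["live", "last_known_good"]

    def banner(self) -> str:
        """Text the UI shows next to the number; non-live data is always labeled."""
        if self.source == "live":
            return "LIVE"
        return f"LAST KNOWN GOOD as of {self.as_of.isoformat()} (live feed unavailable)"


last_good: dict[str, Quote] = {}  # hypothetical in-memory snapshot cache


def get_quote(symbol: str, fetch_live: Callable[[str], float]) -> Quote:
    try:
        q = Quote(fetch_live(symbol), datetime.now(timezone.utc), "live")
        last_good[symbol] = q
        return q
    except Exception:
        if symbol in last_good:
            snap = last_good[symbol]
            # Same value and timestamp as before, but explicitly re-labeled as non-live.
            return Quote(snap.value, snap.as_of, "last_known_good")
        raise  # no snapshot either: surface the failure instead of inventing a value
```

The snapshot keeps its original `as_of` time, so the banner shows how old the data really is, not when it was re-served.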

Graceful failure also extends to user workflows. Reports or models created during a data outage should be flagged automatically so downstream consumers understand the context. Scheduled jobs and automated exports should be able to pause or mark outputs as partial, instead of silently pushing corrupted or incomplete datasets into production systems.
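For scheduled jobs, one option is to have the export step check feed health first. It then either holds the run or stamps the output as partial. This is a minimal sketch. The `feed_status` mapping, the `data_quality: "PARTIAL"` row flag, and the pause-on-required-feed policy are illustrative assumptions, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class ExportResult:
    rows: list[dict]
    status: str  # "complete", "partial", or "paused"
    degraded_feeds: list[str] = field(default_factory=list)


def run_export(rows: list[dict], feed_status: dict[str, bool],
               required: set[str], pause_on_missing_required: bool = True) -> ExportResult:
    """feed_status maps feed name -> True if it was healthy for this run."""
    unhealthy = {name for name, ok in feed_status.items() if not ok}
    unreported = required - set(feed_status)  # a required feed with no status counts as degraded
    degraded = sorted(unhealthy | unreported)
    if required & set(degraded) and pause_on_missing_required:
        # Push nothing downstream; let an operator or a later run decide.
        return ExportResult(rows=[], status="paused", degraded_feeds=degraded)
    if degraded:
        # Ship the data, but every row carries the caveat with it (original rows untouched).
        flagged = [{**row, "data_quality": "PARTIAL"} for row in rows]
        return ExportResult(rows=flagged, status="partial", degraded_feeds=degraded)
    return ExportResult(rows=rows, status="complete")
```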

Why observability matters more than ever

Observability is the difference between a brief research blip and a systemic fintech incident. Rich telemetry on data latency, error rates, schema changes, and vendor health lets teams see trouble before users do. Dashboards that track “time since last update” for each critical feed help quantify real‑time exposure to data freshness risk.
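The "time since last update" metric can be computed from the latest timestamp each feed delivered. This sketch turns those timestamps into per-feed ages and flags any that exceed assumed thresholds. The feed names and thresholds are illustrative. In practice, these ages would usually be exported as gauges to whatever monitoring stack the team already runs.

```python
from datetime import datetime, timedelta, timezone


def feed_ages(last_update: dict[str, datetime], now: datetime) -> dict[str, float]:
    """Seconds since each feed last delivered data."""
    return {feed: (now - ts).total_seconds() for feed, ts in last_update.items()}


def breaches(ages: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Feeds whose age exceeds their threshold, furthest over first; feeds without a threshold are ignored."""
    over = [f for f, age in ages.items() if age > thresholds.get(f, float("inf"))]
    return sorted(over, key=lambda f: ages[f] - thresholds[f], reverse=True)


now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
last = {
    "equities_ticks": now - timedelta(seconds=2),
    "fx_rates": now - timedelta(seconds=45),
    "corporate_actions": now - timedelta(hours=3),
}
ages = feed_ages(last, now)
print(ages)  # {'equities_ticks': 2.0, 'fx_rates': 45.0, 'corporate_actions': 10800.0}
print(breaches(ages, {"equities_ticks": 5, "fx_rates": 10, "corporate_actions": 86400}))  # ['fx_rates']
```

Per-feed thresholds matter here. A three-hour-old corporate-actions feed can be perfectly healthy, while a 45-second-old FX feed may not be.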

As more capital and compliance functions depend on these platforms, organizations are starting to treat data quality SLAs as seriously as they treat uptime SLAs. That shift requires cross‑functional cooperation between data engineering, security, compliance, and front‑office teams. It also demands candid communication with clients about what happens when research tools lose their live view of the market.
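A freshness SLA can be measured much like an uptime SLA: as the share of sampling intervals in which a feed met its target. This worked example is hypothetical. It assumes the feed's age is sampled once a minute for an hour against a 10-second target, and the sample values are invented.

```python
from datetime import timedelta


def freshness_sla_compliance(sampled_ages: list[timedelta], target: timedelta) -> float:
    """Fraction of samples in which the feed met its freshness target."""
    if not sampled_ages:
        raise ValueError("no samples")
    within = sum(1 for age in sampled_ages if age <= target)
    return within / len(sampled_ages)


# Hypothetical hour of per-minute samples: 57 within target, 3 breaches.
samples = [timedelta(seconds=2)] * 57 + [timedelta(seconds=90)] * 3
print(f"{freshness_sla_compliance(samples, timedelta(seconds=10)):.1%}")  # 95.0%
```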

Building a better research culture

Ultimately, a research error on a fintech platform is not just a technical bug. It is a stress test of an organization’s culture around data, transparency, and risk.

Teams that treat outages as learning opportunities improve their incident response, refine their monitoring, and strengthen their contracts with data vendors over time. Teams that minimize or obscure issues invite larger problems later.

For practitioners who want to stay ahead of these challenges, joining focused communities of builders and analysts is becoming essential. WireUnwired Research runs active groups on both WhatsApp and LinkedIn, where members share playbooks and post‑mortems on exactly these kinds of data‑integrity incidents. Access to the WhatsApp group is by request, and the team also publishes on its official WireUnwired Research LinkedIn page.
