I often see young engineers asking — why do we even need something like AMBA? Isn’t a microcontroller self-sufficient? Well, it’s not that simple.

A microcontroller is essentially a compact computer. It sits on a single VLSI chip and includes a processor core, memory blocks, and peripherals like timers, ADCs, and USB interfaces. But here’s the thing — all these blocks can’t just exist in isolation. They need to talk to each other efficiently, and that’s where a bus architecture comes in.

Traditionally, this includes:

- Address Bus

- Data Bus

- Control Bus

But when you’re working with more complex SoCs — which include not just microcontrollers but memory, DSPs, and interfaces like I2C and SPI — the need for a standardized, scalable on-chip communication becomes obvious.

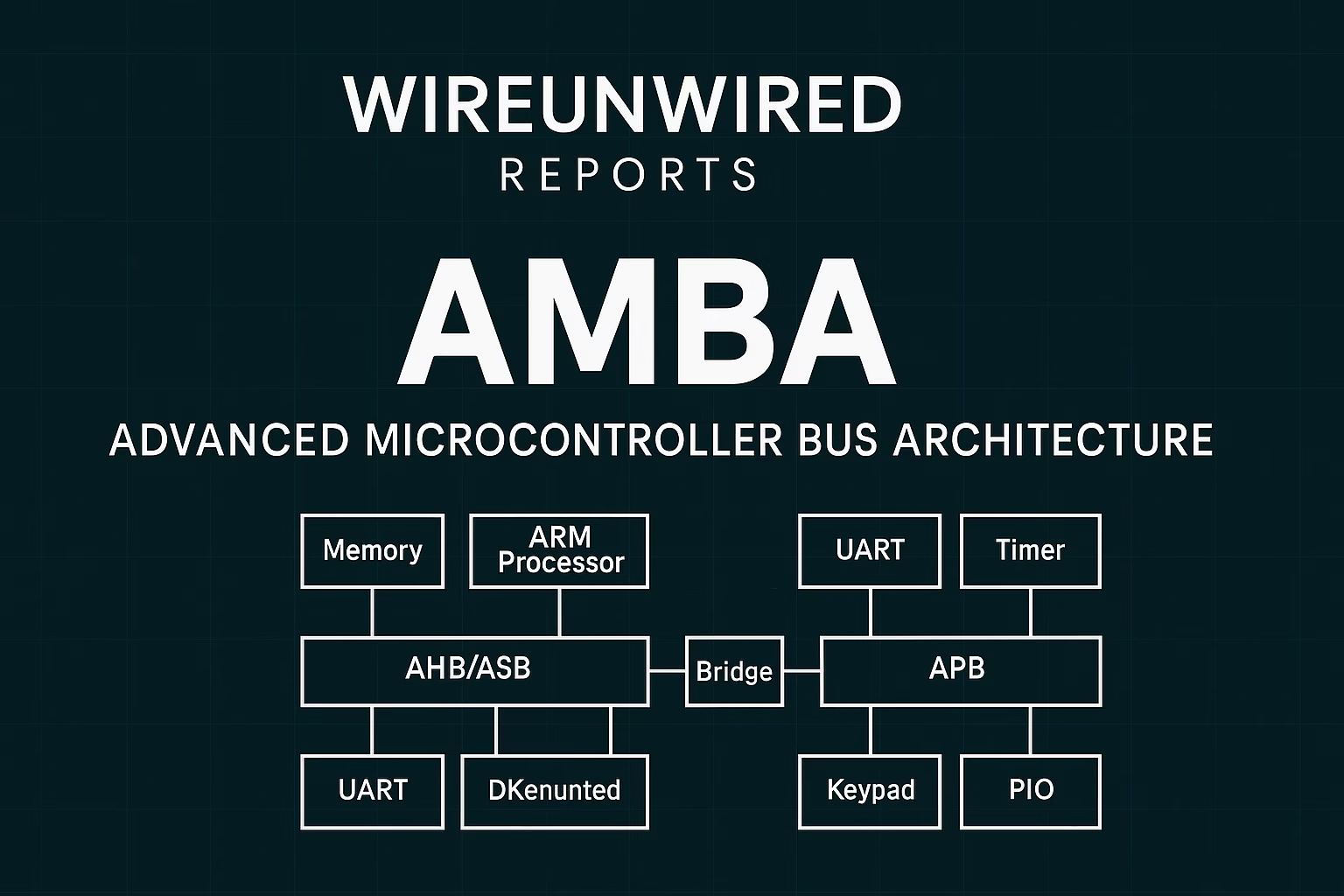

That’s exactly where AMBA (Advanced Microcontroller Bus Architecture) steps in.

What AMBA Really Is

Developed by ARM, AMBA is now the de facto on-chip interconnect standard for SoCs. I think of it as the common language that lets every IP block — whether high-speed memory or a low-power timer — communicate seamlessly inside a chip.

Rather than reinventing the bus every time you design a chip, AMBA lets you plug and play. It reduces wiring complexity, optimizes silicon usage, and accelerates the whole integration process.

AMBA isn’t just one bus — it’s a set of protocols designed for different needs:

Source: Radha Kulkarni

| Protocol | Use Case |
|---|---|
| AHB | High-performance, high-bandwidth masters/slaves |
| ASB | Legacy system bus; predecessor of AHB |
| APB | Low-bandwidth, low-power peripherals |
| AXI | High-speed, highly flexible (used in AI accelerators) |
| ATB | Real-time trace and debug data |

Let’s break these down further.

How APB Handles Low-Power, Low-Bandwidth Communication

Not every part of a chip needs to run fast. Some just need to run efficiently.

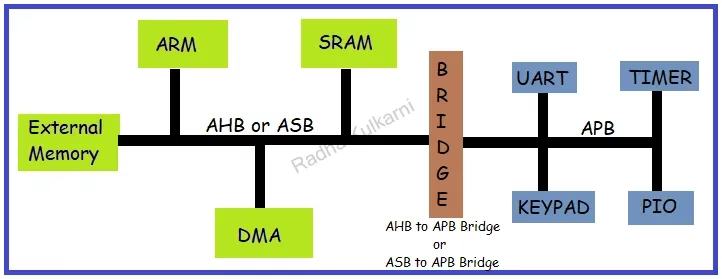

That’s where APB (Advanced Peripheral Bus) comes in. In an SoC, where AHB or AXI might be handling memory or CPU, APB quietly handles the low-bandwidth, low-power peripherals — things like timers, UARTs, keypads, and GPIOs.

I think of APB as the calm, quiet worker in the background — predictable, straightforward, and incredibly power-efficient.

In APB, a bridge connects the peripheral bus to the high-performance AHB/ASB buses. That bridge plays the role of the only master, and the peripherals are always slaves. Unlike AXI or AHB, APB doesn't support complex transfers: no bursts, no pipelining, no out-of-order completion. And that simplicity is what makes it perfect for low-energy blocks.

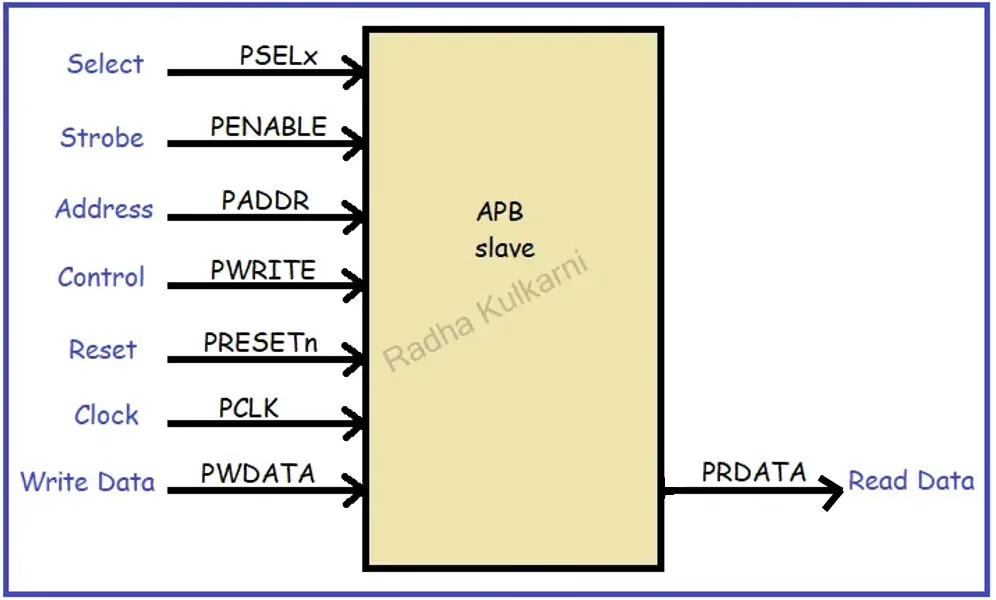

Key Signals in APB

Let’s break down the important signals that make APB tick:

| Signal | Function |
|---|---|
| PCLK | Clock for the APB domain |
| PRESETn | Active-low reset |
| PADDR | Address bus (up to 32 bits wide) for selecting registers |
| PWRITE | Direction: write (1) or read (0) |
| PWDATA | Data bus for write operations |
| PRDATA | Data bus for read operations |
| PSELx | Slave select signal, one per slave (x = slave index) |
| PENABLE | Marks the access phase of a transfer |
| PREADY | Driven low by the peripheral to extend the access phase |

Every APB transfer takes at least two clock cycles: a setup phase followed by an access phase. Let's look at how the bus steps through them.

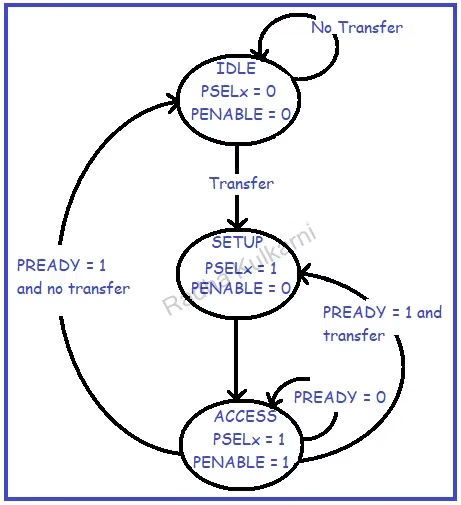

APB Finite State Machine (FSM)

APB works in three clean states. It doesn’t overcomplicate things.

| State | What Happens |
|---|---|
| IDLE | Default state, no activity, PSELx = 0, PENABLE = 0 |
| SETUP | Target peripheral selected, PSELx = 1, PENABLE = 0 |
| ACCESS | Data read/write takes place, PSELx = 1, PENABLE = 1, wait on PREADY |

Here’s how I like to explain the flow:

IDLE: No operation. Reset leads here. Nothing’s moving.

SETUP: The controller sets the address, direction (read/write), and selects the peripheral.

ACCESS: The data transfer actually happens. If PREADY = 0, APB waits here. If PREADY = 1, transfer completes.
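The three states above map neatly onto a tiny state machine. Here is a minimal Python sketch of it, not RTL and not anyone's reference implementation. The `transfer_pending` flag is my own hypothetical stand-in for "the bridge has another transfer queued"; I've also included the ACCESS-to-SETUP shortcut the APB protocol allows for back-to-back transfers.

```python
from enum import Enum


class ApbState(Enum):
    IDLE = "IDLE"
    SETUP = "SETUP"
    ACCESS = "ACCESS"


def next_state(state: ApbState, transfer_pending: bool, pready: bool) -> ApbState:
    """Return the state for the next PCLK cycle.

    transfer_pending is a hypothetical flag meaning another transfer is
    queued; pready mirrors the PREADY signal driven by the peripheral.
    """
    if state is ApbState.IDLE:
        return ApbState.SETUP if transfer_pending else ApbState.IDLE
    if state is ApbState.SETUP:
        # SETUP always lasts exactly one cycle.
        return ApbState.ACCESS
    # ACCESS: stay here while the peripheral inserts wait states.
    if not pready:
        return ApbState.ACCESS
    # Transfer done: start the next one directly, or fall back to IDLE.
    return ApbState.SETUP if transfer_pending else ApbState.IDLE


def outputs(state: ApbState) -> dict:
    """PSEL and PENABLE values driven in each state."""
    return {
        "PSEL": int(state is not ApbState.IDLE),
        "PENABLE": int(state is ApbState.ACCESS),
    }
```

Calling `next_state(ApbState.ACCESS, False, False)` keeps returning `ApbState.ACCESS`, which is exactly the "APB waits here" behaviour described for PREADY = 0.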

APB Write Timing – No Wait State

When everything goes smoothly:

T0–T1: IDLE

T1–T2: SETUP → PSEL = 1, PWRITE = 1

T2–T3: ACCESS → PENABLE = 1, PREADY = 1 → WRITE done

That’s a basic APB write. Data is written from PWDATA to the register at PADDR in one clean ACCESS cycle.

APB Write Timing – With Wait State

Sometimes, the peripheral isn’t ready:

T0–T1: IDLE

T1–T2: SETUP

T2–T3: ACCESS → PREADY = 0 → wait

T3–T4: ACCESS (continued) → PREADY = 1 → WRITE completes

This wait is common with slower peripherals or shared resources. APB handles it without fuss.
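To make the two timing sequences concrete, here is a rough Python sketch that lists the signal values cycle by cycle for a write with any number of wait states. The function name and the tuple layout are my own choices for illustration. `None` marks cycles where PREADY is a don't-care.

```python
def apb_write_trace(wait_states: int) -> list:
    """Return one (phase, PSEL, PENABLE, PREADY) tuple per PCLK cycle."""
    trace = [("IDLE", 0, 0, None), ("SETUP", 1, 0, None)]
    # The peripheral holds PREADY low for each wait state...
    trace += [("ACCESS", 1, 1, 0)] * wait_states
    # ...then raises it in the cycle where the write completes.
    trace.append(("ACCESS", 1, 1, 1))
    return trace


for cycle, row in enumerate(apb_write_trace(wait_states=1)):
    print(f"T{cycle}-T{cycle + 1}: {row}")
```

With `wait_states=0` the trace has three rows, matching the no-wait case; each extra wait state adds one more ACCESS row with PREADY = 0.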

AHB & ASB: The Highways of On-Chip Communication

When you’re dealing with high-speed memory or processor cores, APB just doesn’t cut it. That’s when AMBA’s high-performance protocols — AHB (Advanced High-Performance Bus) and ASB (Advanced System Bus) — take over.

In most SoCs today, AHB is doing the heavy lifting. It connects high-bandwidth blocks like processors, DMA controllers, caches, and external memory interfaces. If APB is the narrow city road, AHB is the multi-lane highway — optimized for speed, concurrency, and efficiency.

What Makes AHB Stand Out?

I often see AHB in designs where latency is critical and data volumes are large. It supports multiple masters and multiple slaves, meaning a CPU, a DMA controller, and another accelerator can all request memory access at the same time, with the shared bus granted to one of them at a time.

Let’s list some AHB capabilities:

| Feature | Description |
|---|---|
| Burst Transfers | Efficient block data moves |
| Split Transactions | A slow slave can release the bus and finish later, so other masters are not blocked |
| Single-Cycle Bus Handover | Zero idle cycles between master switches |
| 32/64/128-bit Data Support | Data path widens as bandwidth needs grow |
| Single-Clock-Edge Operation | All signals are timed to the rising edge of HCLK |

And AHB isn’t just about speed — it’s also about control. It uses an arbiter to resolve access when multiple masters compete for the bus.
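The AHB specification leaves the arbitration policy to the designer, so treat the following as a sketch of two common choices rather than what any particular arbiter does. The function names are mine, and `requests[i]` is a hypothetical stand-in for master i's bus request line.

```python
from typing import List, Optional


def fixed_priority_arbiter(requests: List[bool]) -> Optional[int]:
    """Grant the bus to the lowest-numbered requesting master.

    Index 0 has the highest priority. Returns the granted master index,
    or None when no master is requesting.
    """
    for master, requesting in enumerate(requests):
        if requesting:
            return master
    return None


def round_robin_arbiter(requests: List[bool], last_grant: int) -> Optional[int]:
    """Grant the next requesting master after last_grant, wrapping around."""
    count = len(requests)
    for offset in range(1, count + 1):
        master = (last_grant + offset) % count
        if requests[master]:
            return master
    return None
```

Fixed priority is simple but can starve low-priority masters; round-robin trades a little latency for fairness. Which one fits depends on the traffic in your design.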

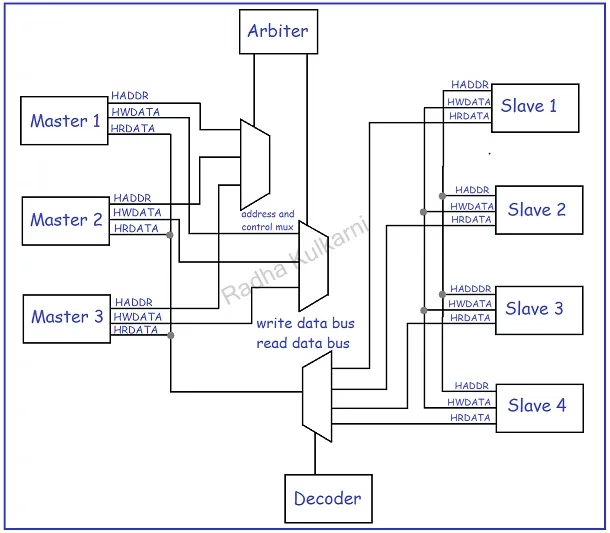

What the AHB Architecture Looks Like

Here’s how a typical AHB setup functions:

- Masters: CPUs, DMAs

- Slaves: SRAM, peripherals, Flash

- HADDR: Address line used to choose the target slave

- HWDATA / HRDATA: Write and read data lines

- Arbiter: Manages who gets bus access

- Decoder: Decodes HADDR and activates the correct HSELx signal

Other critical control signals:

| Signal | Role |
|---|---|
| HCLK | Bus clock |
| HRESETn | Active-low reset |
| HSELx | Selects slave 'x' |
| HREADY | Indicates slave readiness; low inserts wait states |
| HRESP | Slave response (OKAY or ERROR; the original AHB also defines RETRY and SPLIT) |

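A transfer completes only when HREADY = 1. Because AHB overlaps the address phase of one transfer with the data phase of the previous one, a slave that inserts no wait states moves one data beat per clock cycle.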
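The decoder's job, turning HADDR into exactly one HSELx, is easy to sketch in Python. The memory map below is entirely hypothetical (I made up the base addresses, sizes, and slave names); a real design takes these from its own address map.

```python
# Hypothetical memory map: (base address, size in bytes, slave name).
MEMORY_MAP = [
    (0x0000_0000, 0x0008_0000, "flash"),
    (0x2000_0000, 0x0001_0000, "sram"),
    (0x4000_0000, 0x0001_0000, "apb_bridge"),
]


def decode(haddr: int) -> list:
    """Return one HSELx bit per slave; at most one bit is set."""
    return [int(base <= haddr < base + size) for base, size, _ in MEMORY_MAP]


print(decode(0x2000_0004))  # selects the second slave ("sram")
```

An address that falls outside every region yields all zeros here. Real systems usually route such accesses to a default slave that returns an error response, which this sketch leaves out.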

ASB: AHB's Older, Simpler Sibling

ASB is the older of the two: it was the system bus of the original AMBA specification, and AHB later superseded it. While AHB scales to 64-bit and 128-bit data paths, ASB targets 16-bit or 32-bit systems where the bandwidth needs aren't as aggressive.

Functionally, ASB works much like AHB:

- Master makes a request to an arbiter.

- Decoder selects the appropriate slave.

- Data is transferred in single or multiple cycles.

- Wait states and bus grants still apply.

But unlike AHB, ASB lacks features like:

- Burst type and length signalling (ASB only marks a transfer as sequential)

- Split transactions

- Wider data paths (64-bit and 128-bit)

You’d typically find ASB in simpler embedded designs or legacy systems.

Why Bridges Matter: AHB-APB, ASB-APB

You can’t connect a fast bus directly to a slow one. Doing that would mess up timing and waste cycles. That’s why AHB-APB or ASB-APB bridges are introduced — they act as translators between the high-speed and low-speed domains.

These bridges:

- Buffer and forward data correctly

- Handle protocol mismatches

- Maintain system integrity
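Here is a very simplified Python sketch of what a bridge does for a single write: it picks the peripheral from the incoming address, drives the APB setup and access cycles, and holds the AHB side in a wait state (HREADY low) until the APB access finishes. `APB_BASE`, `SLOT_SIZE`, and the one-slot-per-peripheral layout are assumptions of mine, and real bridges may buffer writes or handle clock ratios that this ignores.

```python
APB_BASE = 0x4000_0000  # hypothetical base address of the APB region
SLOT_SIZE = 0x1000      # hypothetical address space per peripheral


def bridge_write(haddr: int, hwdata: int, wait_states: int = 0) -> list:
    """Translate one AHB write into per-cycle APB signal values."""
    common = {
        "PSEL": (haddr - APB_BASE) // SLOT_SIZE,  # index of the selected peripheral
        "PADDR": haddr % SLOT_SIZE,               # register offset inside it
        "PWRITE": 1,
        "PWDATA": hwdata,
    }
    # SETUP cycle: peripheral selected, PENABLE low, AHB master stalled.
    cycles = [dict(common, PENABLE=0, HREADY=0)]
    # ACCESS cycles in which the peripheral is still holding PREADY low.
    cycles += [dict(common, PENABLE=1, HREADY=0)] * wait_states
    # Final ACCESS cycle: the write completes and the AHB master is released.
    cycles.append(dict(common, PENABLE=1, HREADY=1))
    return cycles
```

For example, `bridge_write(0x4000_1008, 0xAB)` returns two cycles targeting peripheral index 1 at register offset 8.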

Key Metrics That Define Bus Performance

To decide whether to go with AHB, ASB, or APB — you need to consider the two key performance indicators of any bus:

| Metric | Definition |
|---|---|
| Bandwidth | How much data can be moved per second (bytes/sec) |
| Latency | How long it takes for data to go from source to destination |

There’s always a trade-off. AHB gives you high bandwidth, low latency, but consumes more power and area. APB gives you low power, low cost, but higher latency and limited data rates.
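A quick back-of-the-envelope calculation shows where the bandwidth gap comes from. The sketch below assumes hypothetical 32-bit buses clocked at 100 MHz, one data beat per cycle on a pipelined AHB, and the two-cycle minimum (SETUP plus ACCESS) on APB. It ignores arbitration, wait states, and bridge overhead, so read the results as upper bounds.

```python
def peak_bandwidth(width_bits: int, clock_hz: float, cycles_per_transfer: int) -> float:
    """Peak bytes per second, ignoring arbitration and wait states."""
    return (width_bits / 8) * clock_hz / cycles_per_transfer


# Hypothetical 32-bit buses at 100 MHz.
ahb = peak_bandwidth(32, 100e6, 1)  # pipelined: one data beat per cycle
apb = peak_bandwidth(32, 100e6, 2)  # SETUP + ACCESS for every transfer

print(ahb / 1e6, apb / 1e6)  # 400.0 200.0 (MB/s)
```

Even at the same clock and width, APB tops out at half of AHB's peak under these assumptions. In practice APB is usually clocked slower as well, which widens the gap.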

Why AMBA Still Matters in 2025

I’ve worked with teams trying to stitch together a functional SoC without a standard interconnect like AMBA — and it’s always a mess. Custom buses might work in the short term, but they rarely scale well. You end up spending more time debugging interfaces than building actual functionality.

That’s why AMBA isn’t just a protocol — it’s an ecosystem.

Key Benefits of Using AMBA

Here’s what you’re really getting when you adopt AMBA for your SoC or ASIC:

| Benefit | What It Means |
|---|---|
| IP Reusability | You can reuse verified blocks like UART, DMA, or AXI interconnects without redesigning the interface. |
| Vendor Compatibility | Most commercial IP blocks (from ARM, Synopsys, etc.) already follow AMBA. No glue logic needed. |
| Design Scalability | Whether you're building a tiny microcontroller or a massive AI accelerator, AMBA scales. Use APB for slow parts, AXI for fast ones. |
| Optimized Silicon Use | Efficient buses reduce routing congestion, die area, and power. |
| Tool Support & Ecosystem | Simulation tools, verification frameworks, and even EDA flows are optimized for AMBA-based designs. |

Real-World Adoption

AMBA is not just confined to ARM-based processors anymore.

You’ll find it in:

- IoT chips

- Smartphones

- Networking SoCs

- AI inference chips

- Automotive microcontrollers

Designers pick AMBA not just because it’s familiar, but because it’s reliable and interoperable. Even RISC-V cores today are being paired with AXI or APB interfaces to stay AMBA-compliant.

Closing Thoughts

At WireUnwired, I believe AMBA’s real strength lies in how invisible it is. You don’t notice it — and that’s the point. It works quietly underneath, keeping the SoC functional, scalable, and easy to maintain.

In a world where chip complexity is exploding, and IP blocks come from different teams — or even different vendors — having a common interconnect like AMBA isn’t just useful. It’s essential.

Image Sources: WireUnwired, Radha Kulkarni Blogs
