Small Language Models (SLMs) are language models built on the same principles as large language models (LLMs) but with far fewer parameters, which makes them lighter to train and run. SLMs are attracting growing interest and are widely seen as having great potential, at a time of remarkable advances in Artificial Intelligence. Some well-known SLMs include GPT-2 Small, DistilBERT, and ALBERT.

Let’s understand what SLMs are and how they work.

What Are SLMs?

SLM stands for Small Language Model. Like LLMs (Large Language Models), SLMs generate human-like language. However, they have fewer parameters and are typically trained on smaller datasets, making them:

- Easier to train and use

- Less demanding of computing power

- More cost-effective

- Fast and effective in specific, narrow use cases thanks to their smaller size

Having fewer parameters lets them run on hardware with limited computing power and memory.
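
As a rough illustration of why parameter count matters for memory, the sketch below estimates the space needed just to store a model's weights. The helper `weight_memory_gib` and the two model sizes are hypothetical, chosen only for illustration, and the estimate ignores activations and other runtime overhead.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str = "fp16") -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


if __name__ == "__main__":
    # Hypothetical model sizes, not measurements of any real model.
    models = [
        ("small model (125M params)", 125_000_000),
        ("large model (70B params)", 70_000_000_000),
    ]
    for name, num_params in models:
        for dtype in BYTES_PER_PARAM:
            gib = weight_memory_gib(num_params, dtype)
            print(f"{name:<28} {dtype}: {gib:8.2f} GiB")
```

Under these assumptions, the small model's weights fit in well under 1 GiB at any of the listed precisions, while the large model needs tens of GiB or more, which is why it typically requires specialized hardware.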

One of the most significant advantages of SLMs is that they run well on simpler hardware, which leads to considerable cost savings. If you need basic functionality without many extra features, an SLM is often the better choice. Moreover, SLMs can be fine-tuned to accomplish specific tasks efficiently.

How Are SLMs Different from LLMs?

Large Language Models (LLMs) are powerful, but their huge size makes them costly and cumbersome to use for smaller, well-defined tasks. SLMs address this issue.

The most noticeable difference is the number of parameters. Each parameter is a learned numeric value, typically the weight of a connection between nodes in a neural network, so a billion-parameter model has roughly a billion such weights. This difference in size directly impacts:

- Training time: LLMs can take weeks to months to train, while SLMs can often be trained in days to weeks.

- Cost and resources: SLMs require fewer resources, making them suitable for low-power applications.
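
To make the idea of a parameter count concrete, here is a minimal sketch that counts the weights and biases in a small fully connected network. The function name `count_dense_params` and the layer sizes are illustrative assumptions, not taken from any particular language model; real SLMs and LLMs use transformer layers whose counts are computed differently, but the principle is the same.

```python
def count_dense_params(layer_sizes):
    """Count trainable parameters in a fully connected network.

    Each pair of adjacent layers contributes one weight per
    connection (n_in * n_out) plus one bias per output node (n_out).
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


if __name__ == "__main__":
    # Hypothetical toy network: 784 inputs, 128 hidden nodes, 10 outputs.
    print(count_dense_params([784, 128, 10]))  # 101770
```

Even this toy network has about a hundred thousand parameters. Every one of them must be stored and updated during training, which is why parameter count drives both training time and cost.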

| Feature | SLMs | LLMs |
|---|---|---|
| Size | Small, with fewer parameters (e.g., millions to low billions). | Large, with billions to trillions of parameters. |
| Training Time | Relatively quick (days to weeks). | Long (weeks to months). |
| Computational Power | Requires less computational power, making it suitable for simpler devices. | Needs high computational resources and specialized hardware (GPUs, TPUs). |
| Cost | Cost-effective to train and deploy. | Expensive to train and deploy. |
| Use Cases | Ideal for lightweight tasks and applications requiring efficiency. | Best for complex tasks and applications requiring advanced capabilities. |
| Flexibility | Easier to fine-tune for specific tasks. | Offers more general-purpose solutions but requires significant resources to fine-tune. |
| Performance | May compromise on accuracy and quality for complex prompts. | Delivers high-quality and nuanced outputs. |
| Hardware Requirements | Can run on consumer-grade or simpler hardware. | Requires advanced hardware for both training and deployment. |
| Training Data | Trained on smaller datasets. | Trained on extensive and diverse datasets. |
| Applications | Text summarization, sentiment analysis, classification, basic translation. | Advanced natural language understanding, creative writing, large-scale translation. |
| User Base | Growing rapidly due to affordability and efficiency. | Widely adopted by enterprises for large-scale AI tasks. |

Applications of SLMs

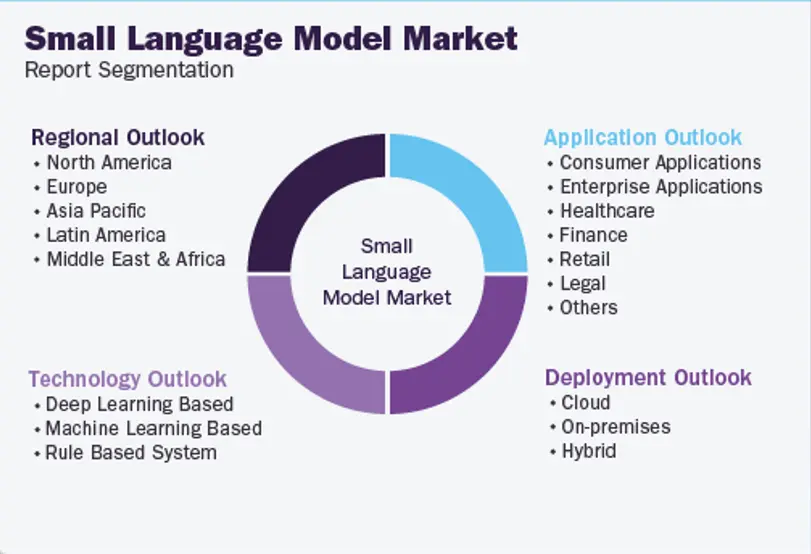

Because they are cost-effective, SLMs are used across many industries. Some key uses include:

- Text Generation and Summarization: SLMs can generate contextual responses and paraphrase or summarize text while maintaining the original meaning.

- Sentiment Analysis: They can detect the emotional tone of a text, which is critical for comprehending prompts and generating appropriate responses.

- Personalization: SLMs can be fine-tuned to provide customized, solution-oriented responses.

- Translation: Their ability to capture context and tone makes them well suited to basic translation between languages.

- Sorting and Classification: They can classify texts and data seamlessly, aiding information retrieval.

- Language Processing: Despite limited resources, SLMs handle language processing tasks efficiently.

The Other Side of the Coin

Like any technology, SLMs have their limitations:

- Their smaller parameter count limits how much they can learn from extensive datasets, making complex problem-solving challenging.

- Outputs from SLMs may sometimes compromise on quality compared to LLMs.

- Although SLMs are cost-effective overall, fine-tuning them for specific applications might incur additional computational costs.

Overview

Small Language Models (SLMs) are gaining widespread attention due to their lightweight nature, lower resource requirements, and cost-effectiveness. They are easier to train and deploy, making them ideal for applications on devices with limited computational capabilities. SLMs shine in tasks like text generation, sentiment analysis, personalization, translation, sorting, classification, and language processing.

However, their limitations, such as difficulties with complex tasks and quality constraints compared to LLMs, must be considered. Fine-tuning might also require extra resources.

In summary, SLMs are invaluable for cost-sensitive projects and environments, but they may not always match the quality and versatility of LLMs.
