
WireUnwired Research • Key Insights

- Breaking News: NVIDIA’s H100 GPU, built on the Hopper architecture, delivers up to 30X faster inference on large language models and up to 4X faster training than the prior generation.

- Impact: The Transformer Engine with FP8 precision enables trillion-parameter language models to run efficiently across enterprise data centers.

- The Numbers: H100 SXM achieves 3,958 teraFLOPS on FP8 Tensor Core operations (with sparsity), 3.35TB/s memory bandwidth, and up to 700W configurable thermal design power.

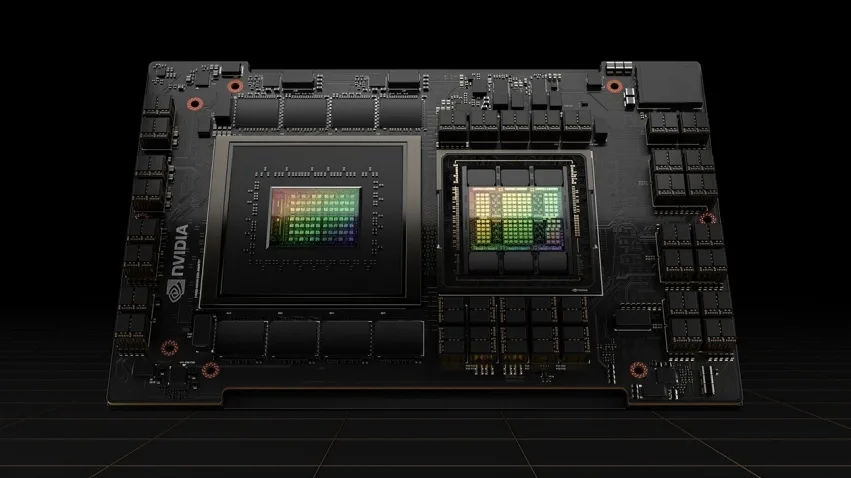

NVIDIA’s H100 Tensor Core GPU represents a fundamental shift in data center acceleration. Built on the Hopper architecture, this processor doesn’t just iterate on previous designs—it reimagines what’s possible for artificial intelligence and scientific computing.

The core innovation lies in the fourth-generation Tensor Cores paired with a dedicated Transformer Engine. This combination handles FP8 precision natively, a critical breakthrough for training and deploying massive language models. For context, GPT-3 contains 175 billion parameters. The H100 can train such models up to 4X faster than its predecessor, the A100.
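To make the value of FP8 concrete, here is a rough back-of-the-envelope sketch of how much memory the weights of a 175-billion-parameter model occupy at different precisions, and how many 80GB GPUs that implies at minimum. It counts weights only; activations, optimizer state, and KV caches are ignored, which is a simplifying assumption, and the function names are illustrative rather than part of any NVIDIA tool.

```python
import math

GPT3_PARAMS = 175e9      # parameter count quoted in the text
H100_MEMORY_GB = 80      # per-GPU memory capacity quoted in this article

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "FP8": 1}


def weight_footprint_gb(num_params, bytes_per_param):
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params, bytes_per_param, gpu_memory_gb=H100_MEMORY_GB):
    """Lower bound on GPU count: weights only, no activations or optimizer state."""
    footprint = weight_footprint_gb(num_params, bytes_per_param)
    return math.ceil(footprint / gpu_memory_gb)


for name, nbytes in BYTES_PER_PARAM.items():
    gb = weight_footprint_gb(GPT3_PARAMS, nbytes)
    gpus = min_gpus_for_weights(GPT3_PARAMS, nbytes)
    print(f"{name}: {gb:.0f} GB of weights, at least {gpus} GPUs")
```

Under these assumptions the weights shrink from 350GB at FP16 to 175GB at FP8, so the lower bound drops from five GPUs to three. Real deployments need considerably more headroom than this floor suggests.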

Performance Metrics That Matter: NVIDIA H100 SXM

Raw specifications tell part of the story. The H100 SXM variant delivers 34 teraFLOPS of FP64 compute, roughly triple the prior generation for double-precision workloads. This matters for high-performance computing applications where accuracy cannot be compromised.

For AI workloads, the numbers are larger still. FP8 Tensor Core operations peak at 3,958 teraFLOPS (with sparsity), and the GPU carries 80GB of high-bandwidth memory delivering 3.35TB/s.

These specifications aren’t marketing speak—they directly translate to faster model training, lower inference latency, and the ability to handle datasets that would have required multiple servers just years ago.
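One way to read the compute and bandwidth figures together is a simple roofline estimate. The sketch below uses only the SXM numbers quoted above and treats them as ideal peaks, which real kernels rarely reach; the function names are illustrative.

```python
PEAK_FP8_FLOPS = 3958e12   # FP8 Tensor Core peak quoted in the article, FLOP/s
MEM_BANDWIDTH = 3.35e12    # memory bandwidth quoted in the article, bytes/s
MEM_CAPACITY = 80e9        # memory capacity quoted in the article, bytes


def ridge_point():
    """Arithmetic intensity (FLOPs per byte) where compute and memory limits meet."""
    return PEAK_FP8_FLOPS / MEM_BANDWIDTH


def attainable_flops(intensity):
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(PEAK_FP8_FLOPS, intensity * MEM_BANDWIDTH)


def full_memory_sweep_seconds():
    """Ideal time to read the entire memory once at peak bandwidth."""
    return MEM_CAPACITY / MEM_BANDWIDTH


print(f"Ridge point: {ridge_point():.0f} FLOPs per byte")
print(f"One pass over 80GB: {full_memory_sweep_seconds() * 1e3:.1f} ms")
for intensity in (1, 100, 2000):
    tflops = attainable_flops(intensity) / 1e12
    print(f"intensity {intensity:>4} FLOPs/byte -> {tflops:,.1f} teraFLOPS attainable")
```

With these figures the ridge point sits near 1,181 FLOPs per byte, so a kernel that performs fewer operations per byte moved is limited by memory bandwidth rather than by Tensor Core throughput. A single pass over the full 80GB takes roughly 24 ms at peak bandwidth.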

The PCIe variant trades some performance for flexibility, delivering 2TB/s memory bandwidth and fitting into standard enterprise infrastructure without specialized power or cooling requirements.

Connectivity and Scalability of the NVIDIA H100

A GPU is only as powerful as its ability to communicate with others. The H100 features fourth-generation NVLink, delivering 900 gigabytes per second of GPU-to-GPU interconnect—over 7X faster than PCIe Gen5. This matters enormously when scaling from single-GPU inference to massive clusters.
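The "over 7X" figure can be sanity-checked with simple arithmetic. The sketch below assumes a PCIe Gen5 x16 link provides about 128GB/s of bidirectional bandwidth; that value is not stated in this article and is an assumption of the example, as are the function names.

```python
NVLINK_GBPS = 900            # fourth-generation NVLink figure quoted in the article, GB/s
PCIE_GEN5_X16_GBPS = 128     # ASSUMPTION: approximate bidirectional PCIe Gen5 x16 bandwidth, GB/s


def nvlink_speedup():
    """Ratio of NVLink bandwidth to the assumed PCIe Gen5 x16 bandwidth."""
    return NVLINK_GBPS / PCIE_GEN5_X16_GBPS


def transfer_seconds(payload_gb, link_gbps):
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return payload_gb / link_gbps


print(f"NVLink vs PCIe Gen5: {nvlink_speedup():.2f}x")
payload_gb = 80  # e.g. copying one GPU's full memory contents to a peer
print(f"{payload_gb}GB over NVLink: {transfer_seconds(payload_gb, NVLINK_GBPS) * 1e3:.0f} ms")
print(f"{payload_gb}GB over PCIe:   {transfer_seconds(payload_gb, PCIE_GEN5_X16_GBPS) * 1e3:.0f} ms")
```

Under that assumption the ratio comes out to about 7.03, consistent with the claim. Moving 80GB would take roughly 89 ms over NVLink versus 625 ms over PCIe in the ideal case.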

When paired with NVIDIA’s Grace CPU, the H100 connects over a chip-to-chip interconnect with 900GB/s of bandwidth. NVIDIA claims this pairing provides up to 30X higher aggregate system memory bandwidth than today’s fastest servers and up to 10X higher performance for applications handling terabytes of data.

For those building massive clusters, the H100 supports NDR Quantum-2 InfiniBand networking, which accelerates communication across every GPU and node. Systems can scale to 256 H100s while maintaining efficiency.

Power Efficiency and Thermal Design of H100 SXM

The H100 SXM operates at up to 700W configurable thermal design power. The PCIe variant runs at 300-350W. These numbers matter for data center operators calculating power budgets and cooling requirements. Despite the massive performance gains, the H100 achieves better performance-per-watt than previous generations—a critical consideration for operational costs.
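As a rough illustration of the power-budget calculation an operator might run, the sketch below counts how many GPUs fit within a given power envelope and estimates annual energy at full TDP. The 10kW budget is a hypothetical value chosen for the example, and the calculation covers GPUs only; CPUs, networking, and cooling overhead are ignored.

```python
# TDP figures quoted in the article, in watts.
TDP_WATTS = {
    "H100 SXM": 700,
    "H100 PCIe (350W)": 350,
    "H100 PCIe (300W)": 300,
}
HOURS_PER_YEAR = 24 * 365


def gpus_within_budget(budget_watts, tdp_watts):
    """Whole GPUs that fit in the budget, counting GPU power only."""
    return budget_watts // tdp_watts


def annual_energy_kwh(tdp_watts):
    """Energy drawn by one GPU running at full TDP for a year."""
    return tdp_watts * HOURS_PER_YEAR / 1000


BUDGET_WATTS = 10_000  # hypothetical power envelope for illustration
for name, tdp in TDP_WATTS.items():
    count = gpus_within_budget(BUDGET_WATTS, tdp)
    kwh = annual_energy_kwh(tdp)
    print(f"{name}: {count} GPUs in {BUDGET_WATTS}W, {kwh:,.0f} kWh per GPU per year")
```

In this simplified model a 10kW envelope holds 14 SXM modules or 28 PCIe cards at 350W, and a single SXM module at full TDP draws about 6,132 kWh per year.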

Real-World Impact of H100 on Language Models

The practical implications are profound. For large language models up to 70 billion parameters—like Llama 2 70B—the H100 NVL with NVLink bridge increases performance up to 5X over A100 systems while maintaining low latency in power-constrained environments. This means enterprises can deploy state-of-the-art conversational AI without building new data centers.

Inference speeds improve by up to 30X on large language models. For organizations running inference at scale—handling millions of user queries daily—this translates directly to reduced infrastructure costs and faster response times.

Beyond AI: HPC and Scientific Computing

The H100 isn’t exclusively an AI processor. For high-performance computing, the GPU includes new DPX (Dynamic Programming) instructions delivering 7X higher performance over the A100 and 40X speedups over CPUs on dynamic programming algorithms such as Smith-Waterman for DNA sequence alignment and protein alignment for protein structure prediction.
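For readers unfamiliar with Smith-Waterman, here is a minimal pure-Python sketch of the local-alignment recurrence. It does not use DPX or any GPU code; it only shows the dynamic programming structure, whose inner add-and-take-the-maximum step is the kind of operation such instructions are meant to speed up. The scoring values and the linear gap penalty are illustrative assumptions.

```python
def smith_waterman(a, b, match=2, mismatch=-1, gap=-2):
    """Return the best local alignment score between sequences a and b.

    Uses a linear gap penalty. The scoring parameters are illustrative
    defaults, not values taken from any particular tool.
    """
    rows, cols = len(a) + 1, len(b) + 1
    # score[i][j] = best score of a local alignment ending at a[i-1], b[j-1]
    score = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            substitution = match if a[i - 1] == b[j - 1] else mismatch
            diagonal = score[i - 1][j - 1] + substitution  # align a[i-1] with b[j-1]
            up = score[i - 1][j] + gap                     # gap in b
            left = score[i][j - 1] + gap                   # gap in a
            # Clamping at zero is what makes the alignment local.
            score[i][j] = max(0, diagonal, up, left)
            best = max(best, score[i][j])
    return best


print(smith_waterman("GATTACA", "TTAC"))
```

Every cell depends only on its three neighbours, so cells along an anti-diagonal can be computed independently, which is what makes the algorithm a natural fit for parallel hardware.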

This matters for researchers tackling genomics, drug discovery, climate modeling, and materials science. The combination of massive floating-point throughput and specialized instructions accelerates time to discovery for critical scientific challenges.

Multi-Instance GPU and Flexibility

Enterprise customers often need flexibility. The H100 supports Multi-Instance GPU (MIG) technology, which allows a single GPU to be partitioned into up to 7 independent instances with 10GB each. This improves resource utilization when diverse workloads (some inference tasks, some analytics, some training jobs) run simultaneously on the same physical hardware.
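The partitioning constraint can be pictured with a small planning sketch. It models only the two limits stated above (at most 7 instances, 10GB each) and is not the actual MIG configuration interface; the workload names and memory sizes are hypothetical.

```python
MAX_INSTANCES = 7   # instance limit quoted in the article
SLICE_GB = 10       # memory per instance quoted in the article


def plan_mig(workloads):
    """Assign each workload to its own instance if it fits.

    workloads: dict mapping a workload name to its memory need in GB.
    Returns (assigned, rejected), where assigned maps name -> instance index.
    """
    assigned, rejected = {}, []
    for name, need_gb in workloads.items():
        if need_gb <= SLICE_GB and len(assigned) < MAX_INSTANCES:
            assigned[name] = len(assigned)
        else:
            rejected.append(name)
    return assigned, rejected


# Hypothetical workloads for illustration only.
jobs = {"chat-inference": 8, "embedding-service": 4, "analytics": 6, "fine-tune": 24}
assigned, rejected = plan_mig(jobs)
print("assigned:", assigned)
print("rejected:", rejected)
```

In this toy example the three small jobs each get an isolated slice, while the 24GB fine-tuning job does not fit in a single 10GB instance and would need a different arrangement.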


The Competitive Landscape and Looking Forward

The H100 represents the current peak of data center GPU acceleration. Its architectural innovations (the Transformer Engine, fourth-generation Tensor Cores, and advanced interconnects) establish the foundation for the next wave of AI applications.

Further, the H100 arrives at a moment when demand for AI acceleration vastly exceeds supply. Enterprises building generative AI applications, fine-tuning large language models, and scaling inference workloads all compete for limited GPU inventory. The architecture’s efficiency and performance make it the preferred choice for organizations with serious AI ambitions.

For data analytics workloads, the combination of massive memory bandwidth (3.35TB/s), GPU-accelerated Spark 3.0, and NVIDIA RAPIDS enables processing of datasets that would overwhelm traditional CPU-based systems. Organizations can now perform real-time analytics on terabyte-scale datasets.

As organizations move beyond proof-of-concept AI projects toward production deployments, the H100’s combination of raw performance, flexibility, and efficiency makes it the infrastructure choice for serious AI work.
