What’s the first thing that comes to your mind when I say “large language models”? Probably something like ChatGPT, Gemini, Claude, or Meta’s LLaMA — right? And if I ask you what’s powering these models? The answer is almost always the same — GPUs. Expensive, power-hungry GPUs, often from one company: NVIDIA.

But what if I told you there’s an Indian platform that’s trying to flip this entire equation?

Yes, you heard that right.

We’re talking about Kompact AI — a CPU-first AI stack developed by Ziroh Labs, in collaboration with IIT Madras (Indian Institute of Technology, Madras). It promises to run modern AI models — from LLaMA 3 to BERT and even RAG pipelines — entirely on CPUs, with zero dependency on GPU clusters or cloud-based infrastructure. And that changes a lot — not just for India, but for how the world might think about building accessible, affordable, and sovereign AI.

What Is Kompact AI?

Kompact AI is a CPU-first AI platform developed by Ziroh Labs in partnership with IIT Madras. Where conventional AI deployments rely heavily on power-hungry GPUs or cloud-based infrastructure, Kompact AI is built to run large language models (LLMs) and other AI workloads entirely on CPUs, and the company says it does so without compromising on performance.

In fact, the company claims that Kompact AI’s architecture is designed to handle a wide range of AI tasks, including inference, fine-tuning, and even training complex models, all while maintaining efficiency and scalability.

With its ability to support various workflows, Kompact AI offers a versatile solution for developers and enterprises looking to deploy AI at scale.

Why CPU-First? The Benefits Over GPUs

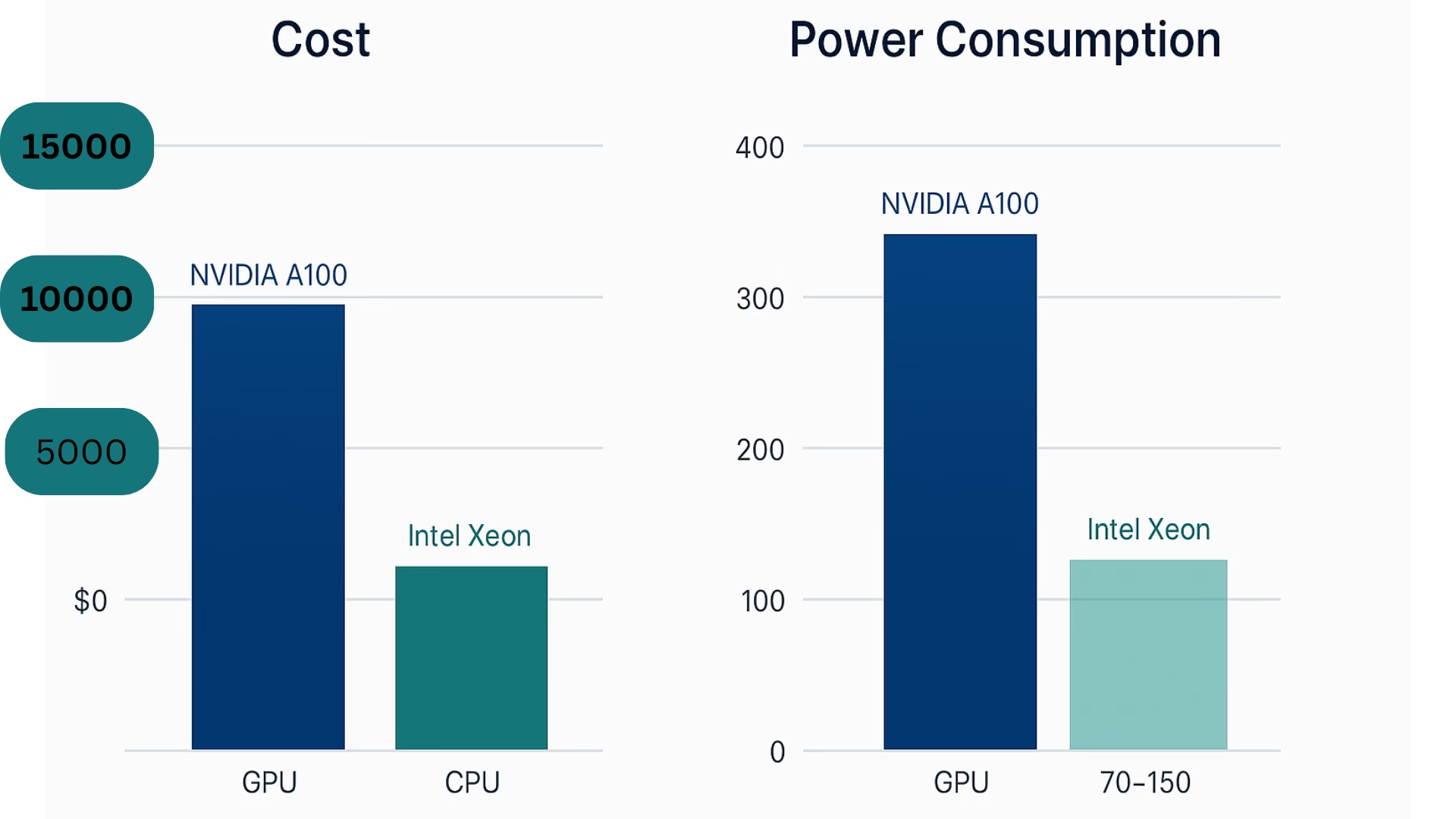

The CPU-first approach gives Kompact AI several benefits, particularly in cost-efficiency, energy efficiency, and accessibility, based on generalised industry data.

Note: Although there is limited data available on the exact cost-effectiveness and energy efficiency of Kompact AI compared to traditional GPU-based solutions, the following points are based on generalized data from similar CPU and GPU technologies, and should be taken as rough estimates.

- Cost Efficiency: CPUs are typically much more affordable than GPUs, with high-performance Intel Xeon processors costing between $1,000–$3,000 compared to NVIDIA A100 GPUs, which range from $10,000–$15,000. This makes Kompact AI a cost-effective solution for businesses, particularly for those in regions with limited access to GPUs or constrained budgets.

- Energy Efficiency: GPUs tend to draw much more power than CPUs. For example, the NVIDIA A100 can draw up to 400 watts, whereas the Intel Xeon processors considered here use between 70 and 150 watts. On those figures, a CPU draws roughly 60% to 80% less power per chip than a GPU, which can lead to significant operational cost savings, especially where energy efficiency is crucial, such as remote or off-grid locations.

- Accessibility and Scalability: CPUs are more widely available and accessible than GPUs, especially in regions where high-end GPUs are difficult to obtain or prohibitively expensive. Kompact AI allows businesses of all sizes to scale their AI applications without the need for heavy infrastructure investments, making it a versatile and scalable solution for a variety of industries.

- Ideal for Inference and Edge Applications: While GPUs are better suited for large-scale model training, CPUs are well-suited for inference and fine-tuning tasks. This makes Kompact AI particularly beneficial for real-time AI applications and edge devices, where local processing and low power consumption are essential.
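To make the arithmetic behind the cost and energy bullets explicit, here is a minimal sketch that recomputes the ranges from the rough figures quoted above. The constants are those generalised numbers, not measurements of Kompact AI, and the function names are my own for illustration.

```python
# Rough nameplate figures quoted in the bullets above; these are NOT
# measurements of Kompact AI, only generalised industry numbers.
GPU_POWER_W = 400                    # NVIDIA A100, upper bound
CPU_POWER_W = (70, 150)              # Intel Xeon range
GPU_PRICE_USD = (10_000, 15_000)     # NVIDIA A100 range
CPU_PRICE_USD = (1_000, 3_000)       # Intel Xeon range


def percent_less(smaller: float, larger: float) -> float:
    """Return how much smaller `smaller` is than `larger`, in percent."""
    return (1 - smaller / larger) * 100


def power_saving_range() -> tuple:
    """Per-chip power saving of a CPU vs a GPU as (min, max) percent."""
    low = percent_less(max(CPU_POWER_W), GPU_POWER_W)
    high = percent_less(min(CPU_POWER_W), GPU_POWER_W)
    return low, high


def price_ratio_range() -> tuple:
    """How many times more a GPU costs than a CPU, as (min, max)."""
    low = min(GPU_PRICE_USD) / max(CPU_PRICE_USD)
    high = max(GPU_PRICE_USD) / min(CPU_PRICE_USD)
    return low, high


if __name__ == "__main__":
    lo, hi = power_saving_range()
    print(f"CPU draws {lo:.1f}% to {hi:.1f}% less power per chip")
    r_lo, r_hi = price_ratio_range()
    print(f"GPU costs {r_lo:.1f}x to {r_hi:.1f}x as much per chip")
```

Keep in mind that this compares power and price per chip only. If a CPU takes longer than a GPU to produce the same output, the energy and cost per answer could look quite different, and that is exactly the data we do not yet have for Kompact AI.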

How does Kompact AI work?

Unlike traditional models that heavily rely on GPUs, Kompact AI integrates sophisticated optimisations at multiple levels to ensure its architecture is both powerful and energy-efficient on CPUs. Below are some key innovations driving the platform’s unique capabilities:

Note: Again, very little technical detail has been published, so the points below combine the limited data I could find with my own educated guesses about the techniques likely involved.

🛠️ 1. Custom Kernel-Level CPU Optimisations

Kompact AI appears to go beyond user-space libraries and tap into OS- and kernel-level optimisations to improve CPU performance. This is likely similar in spirit to the compiler-level tuning done by Google's XLA (Accelerated Linear Algebra), which uses LLVM to generate optimised machine code for CPUs and GPUs. By unlocking latent performance in CPUs, Kompact AI could achieve higher throughput and more efficient processing without relying on specialised hardware like GPUs. This vertical software-hardware alignment would mean that the entire stack, from the operating system to the application, is tuned for efficiency.

🧩 2. Hybrid Modular Architecture

Unlike traditional AI systems that rely on a single large model (e.g., a monolithic LLM), Kompact AI's architecture may incorporate a hybrid modular approach, integrating multiple smaller models that can operate in parallel or cooperate. This modular design could enable micro-agent systems that perform specific tasks such as retrieval, reasoning, or control. These models could interact via a shared controller, allowing them to dynamically allocate resources based on the task at hand. If this is how it works, the approach would suit CPUs well, since each request only runs the small model it needs rather than paying the compute and memory cost of one large monolithic model.
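To picture what a shared controller over small task-specific models might look like, here is a minimal sketch. Everything in it is hypothetical: the `Controller` class, the task names, and the stand-in functions are my own illustration of the idea, not Kompact AI's actual design or API.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for small task-specific models. In a real system
# each would wrap a compact model; here they are plain functions.
def retrieve(query: str) -> str:
    return f"[retrieved passages for: {query}]"


def reason(query: str) -> str:
    return f"[reasoned answer for: {query}]"


def control(query: str) -> str:
    return f"[action plan for: {query}]"


class Controller:
    """Routes each request to one registered module by task name."""

    def __init__(self) -> None:
        self._modules: Dict[str, Callable[[str], str]] = {}

    def register(self, task: str, module: Callable[[str], str]) -> None:
        self._modules[task] = module

    def dispatch(self, task: str, query: str) -> str:
        # Only the module that matches the task runs, so the other
        # modules cost nothing for this request.
        if task not in self._modules:
            raise KeyError(f"no module registered for task '{task}'")
        return self._modules[task](query)


def build_controller() -> Controller:
    controller = Controller()
    controller.register("retrieval", retrieve)
    controller.register("reasoning", reason)
    controller.register("control", control)
    return controller


if __name__ == "__main__":
    controller = build_controller()
    print(controller.dispatch("retrieval", "What is Kompact AI?"))
```

The point of the sketch is the routing: only one small module does work per request, which is the property that would make such a design friendlier to CPUs than a single large model.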

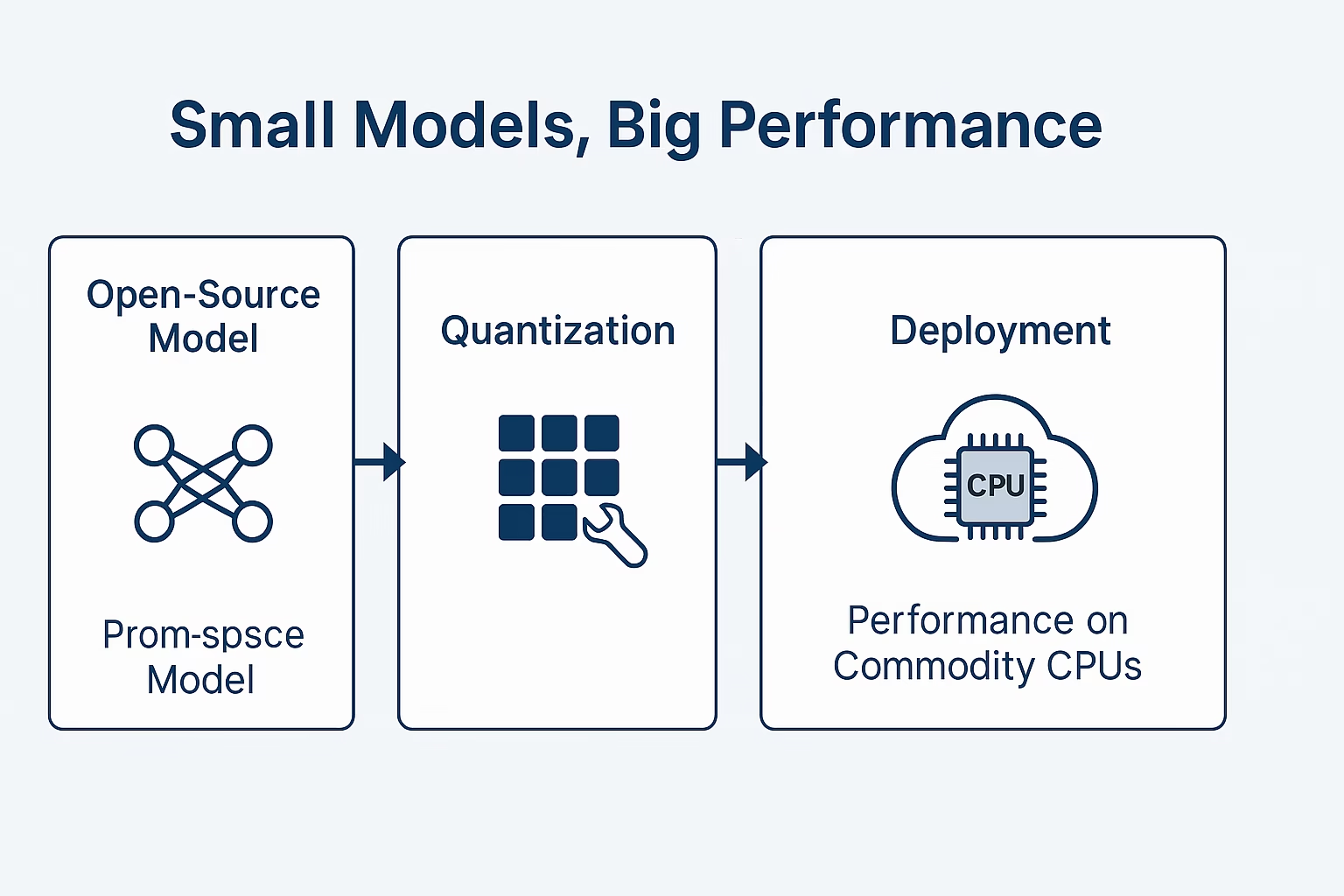

🧪 3. Focus on “Small Models with Big Performance”

Rather than focusing on creating new, massive models from scratch, Kompact AI appears to emphasise distilled and quantised versions of existing open models, such as Phi-3 mini. This approach helps optimise the performance of smaller models while still achieving impressive results. The platform seems to focus on compiler-style AI optimisation, fine-tuning models for efficient deployment on CPUs. By focusing on FLOPS/$ (floating-point operations per second per dollar), Kompact AI can offer cost-effective solutions for real-world applications, ensuring that even smaller, less complex models can deliver substantial performance.
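For readers new to quantisation, here is a minimal sketch of one common scheme: symmetric per-tensor int8 quantisation. I am assuming this scheme purely for illustration; there is no public information on which quantisation method Kompact AI actually uses, and the function names are my own.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantisation of floats to the range [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", codes)
    print("worst-case error:", worst)
```

Each weight stored as an int8 code takes 1 byte instead of the 4 bytes of a 32-bit float, at the price of a small rounding error per weight. That trade is what makes quantised models attractive on CPUs, where memory bandwidth is often the bottleneck.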

LLMs CPUfied by Kompact AI

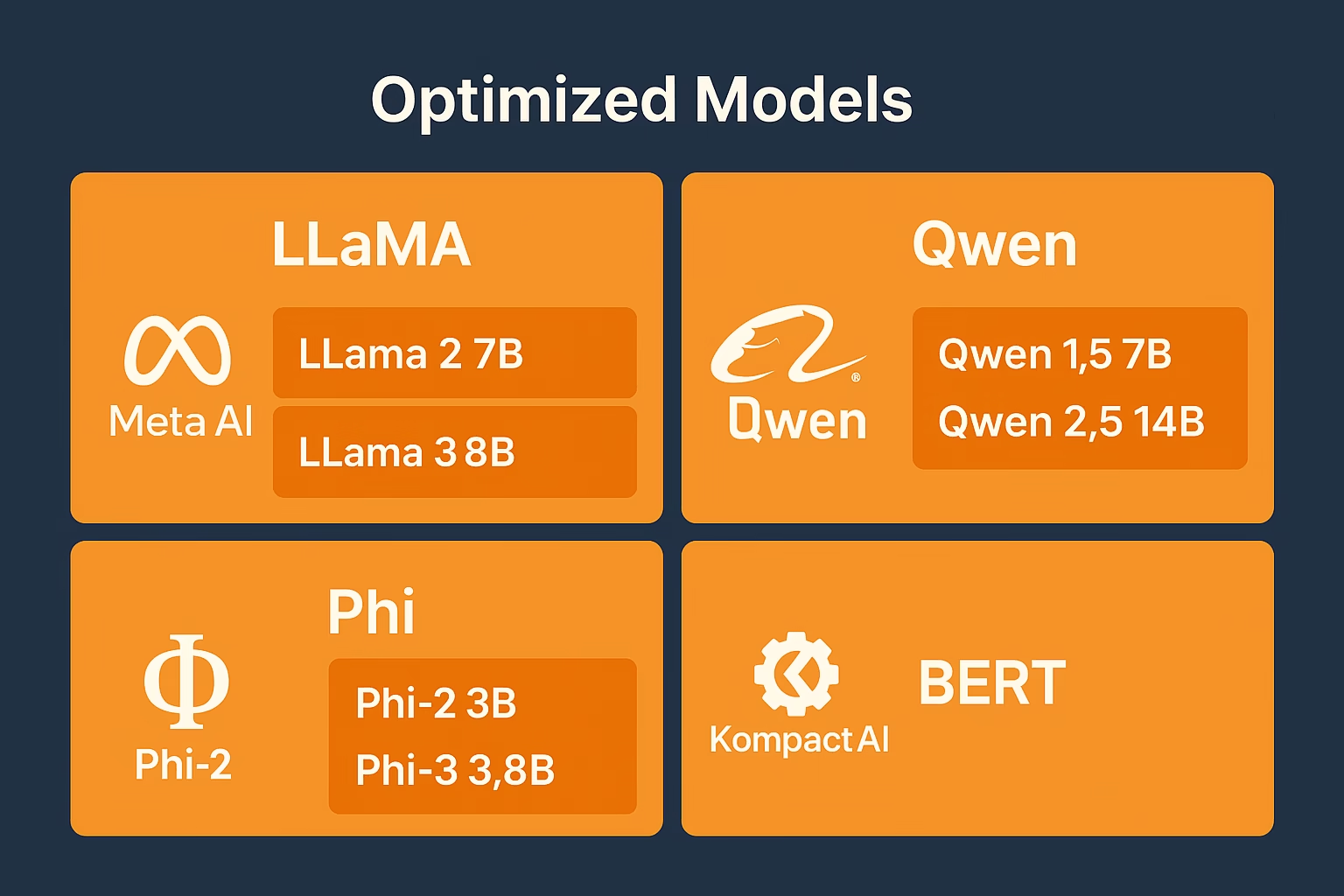

Although the list of LLMs that Kompact AI has optimized is vast, I’ve highlighted some of the most famous and widely recognized models below:

| LLM | Status | Key Features |
|---|---|---|
| DeepSeek-R1-Distill-Llama-8B | Fine-Tuned | Optimized for large-scale NLP tasks, inference, and fine-tuning. |
| Llama 2 7B | Fine-Tuned | Focused on general NLP tasks like question answering and text generation. |
| Code Llama 7B | Fine-Tuned | Specialized for code generation and programming-related tasks. |
| Code Llama 13B | Fine-Tuned | Enhanced for complex code generation and understanding. |
| BERT | Fine-Tuned | Widely used for classification, question answering, and text-based tasks. |
| Llama 2 13B | Fine-Tuned | Suitable for more complex NLP tasks requiring larger models. |
| Nous Hermes Llama2 13B | Fine-Tuned | Custom version of Llama2 designed for enhanced performance. |
| Phi-3 3.8B | Fine-Tuned | Optimized for a wide variety of NLP tasks with lower computational cost. |

For the complete list, you can visit their website.

Conclusion:

Kompact AI looks promising, but its full potential has yet to be verified. In particular, it remains to be seen how much performance is affected by running entirely on CPUs.

So, stay tuned for that WireUnwired article. Until then, tell us in the comments what you think about Kompact AI.
