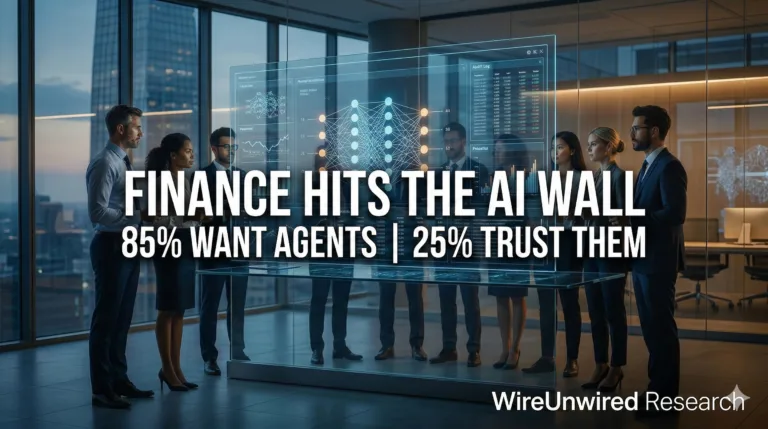

Insurance executives love AI. Their employees don’t trust it. We covered this disconnect recently: 90% of insurance leaders are increasing AI spending while employee usage dropped 10 percentage points and only 40% feel prepared to use these tools. Now the industry is doubling down with agentic AI—autonomous systems that don’t just analyze data but make decisions and take actions.

The pitch sounds compelling. Agentic AI can automate complex workflows, navigate legacy systems, and bypass the technical debt keeping insurers stuck in pilot programs. But if employees already feel unprepared for basic AI tools, how will they respond to systems that autonomously process claims and interact with customers without constant human oversight?

The insurance industry needs this to work. Losses have exceeded $100 billion annually for six consecutive years. Climate-driven property claims are now structural problems that incremental improvements can’t solve. Agentic AI offers a path to compress cycle times and control costs at scale. The question is whether insurers can deploy these systems without making the employee trust problem worse.

⚡ WireUnwired • Fast Take

- Insurers push agentic AI despite employees feeling unprepared for basic AI

- Results where deployed: 30% efficiency gains, complex-case liability assessments 23 days faster, 65% fewer complaints

- Only 7% of insurers scaled AI beyond pilots—70% of barriers are organizational, not technical

- Core problem: Same trust gap undermining basic AI now threatens autonomous systems

Traditional AI tools in insurance are mostly analytical: they flag suspicious claims, predict risk scores, and recommend coverage adjustments. Humans then review the output and decide. Agentic AI systems take actions autonomously. They don’t just flag a missing document in a claim. They request it from the customer, update status in multiple systems, and notify relevant parties automatically.
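To make the distinction concrete, here is a minimal Python sketch of the missing-document example. Everything in it is hypothetical and chosen for illustration: the `Claim` fields, the `REQUIRED_DOCS` set, and both function names are assumptions, not any insurer's or vendor's actual system. The analytical function only reports a finding; the agentic one acts on it and records what it did.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical document requirements, for illustration only.
REQUIRED_DOCS = {"police_report", "repair_estimate"}


@dataclass
class Claim:
    claim_id: str
    documents: Set[str]
    status: str = "open"
    log: List[str] = field(default_factory=list)


def analytical_check(claim: Claim) -> List[str]:
    """Analytical tool: report missing documents and change nothing."""
    return sorted(REQUIRED_DOCS - claim.documents)


def agentic_handle(claim: Claim) -> List[str]:
    """Agentic tool: find missing documents, then act on the finding."""
    missing = analytical_check(claim)
    if not missing:
        return missing
    for doc in missing:
        # Stand-in for a real customer outreach step.
        claim.log.append(f"requested {doc} from customer")
    # Stand-ins for writes to other systems and for notifications.
    claim.status = "awaiting_documents"
    claim.log.append("status updated in connected systems")
    claim.log.append("adjuster notified")
    return missing


claim = Claim("C-1", {"police_report"})
flagged = analytical_check(claim)   # claim is unchanged after this call
acted_on = agentic_handle(claim)    # claim status and log now reflect actions
```

The log matters as much as the actions: it is what lets an adjuster see afterwards what the agent did without redoing the work.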

Sedgwick’s deployment with Microsoft demonstrates this. Their Sidekick Agent assists claims professionals with real-time guidance, improving processing efficiency by over 30%. The agent handles routine documentation, data entry, and status updates while humans focus on complex judgment calls.

The more ambitious approach is end-to-end automation. One major insurer deployed over 80 models in its claims domain. Results: complex-case liability assessment time cut by 23 days, routing accuracy improved by 30%, customer complaints dropped 65%. These numbers explain executive enthusiasm. When agentic AI works, it compresses cycle times dramatically while maintaining oversight.

But only 7% of insurers have scaled AI initiatives effectively across their organizations. The barrier isn’t technology—it’s organizational readiness. Research shows 70% of scaling challenges are organizational rather than technical. This is the same dynamic we identified in employee AI adoption: executives invest in tools while the organization isn’t prepared to use them.

Legacy systems create immediate friction. Insurance companies run on decades-old infrastructure, often heavily customized. Integrating agentic AI requires either expensive system replacement or complex integration layers that don’t solve the underlying technical debt. Fragmented data architectures compound this—customer information, policy details, claims history, and billing records often live in separate systems.

Talent shortages limit deployment speed. Actuaries, underwriters, and claims specialists who understand both insurance operations and AI capabilities are scarce. Siloed teams and unclear priorities slow decision-making when cross-functional coordination is required.

Industry guidance recommends establishing an “AI Center of Excellence” for governance and expertise. Start with high-volume, repeatable tasks. Use prebuilt frameworks to reduce implementation time. But this prescriptive approach doesn’t address the core challenge: building trust among employees who will work alongside these systems daily.

Agentic AI deployment succeeds or fails based on employee trust. When an agent autonomously requests documentation, updates multiple systems, and routes a claim, adjusters must trust those actions were appropriate. If they don’t, they’ll manually verify everything the agent did, eliminating efficiency gains.

This is where the employee preparation gap becomes critical. Companies can’t skip foundational AI literacy and jump straight to autonomous agents. Employees need to understand what these systems can and can’t do, when to override them, and how to identify when agents are operating outside appropriate parameters. Without that foundation, deployment creates anxiety rather than augmentation.

The 65% reduction in customer complaints suggests these systems can improve outcomes when implemented well. But “implemented well” requires more than technical integration. It requires training programs that build employee confidence, clear escalation paths when agents make questionable decisions, and transparent communication about which tasks are being automated and why.
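One way to picture a clear escalation path is as an explicit, readable rule that decides when an agent may act alone and when a human must review. The Python sketch below is an illustration under stated assumptions: the action kinds, the 0.90 confidence floor, and the 1,000 payment ceiling are invented values, not figures from any deployment described here.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical policy values, chosen only for illustration.
AUTO_KINDS = {"request_document", "update_status"}
MIN_CONFIDENCE = 0.90
MAX_AUTO_AMOUNT = 1000.0


@dataclass(frozen=True)
class ProposedAction:
    claim_id: str
    kind: str
    confidence: float
    amount: float = 0.0


def route(action: ProposedAction) -> Tuple[str, str]:
    """Return ("execute" | "escalate", human-readable reason)."""
    if action.kind not in AUTO_KINDS:
        return ("escalate", f"'{action.kind}' is not on the automated-task list")
    if action.confidence < MIN_CONFIDENCE:
        return ("escalate", "agent confidence is below the agreed floor")
    if action.amount > MAX_AUTO_AMOUNT:
        return ("escalate", "amount exceeds the automated limit")
    return ("execute", "within agreed limits")


decision, reason = route(ProposedAction("C-1", "approve_payment", 0.97, 250.0))
```

Returning a plain-language reason with every decision supports the transparency point: employees can see which tasks are automated and why a given case was handed to them.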

The insurance industry faces genuine pressure to deploy agentic AI. Six consecutive years of $100 billion+ losses aren’t sustainable. Climate-driven claims will only intensify. The technology can work: the 30% efficiency improvements and 23-day cycle time reductions reported above show that.

But technology effectiveness doesn’t guarantee successful adoption. The same disconnect that undermined basic AI tools, with executives investing while employees feel unprepared, threatens agentic AI deployment. If 70% of scaling challenges are organizational, most failures will stem from inadequate employee readiness, not technological limitations.

Insurers that succeed will invest as heavily in organizational transformation as in technology deployment. That means comprehensive training, transparent communication about automation goals, clear role redefinition for affected employees, and mechanisms for employee input into which processes get automated.

The alternative is expensive AI systems that employees work around rather than with, generating impressive pilot metrics that never scale. The industry can’t afford that outcome, but avoiding it requires addressing the trust gap we identified before—not just deploying more sophisticated technology on top of an unprepared workforce.


Related: Insurance Executives Love AI—But Employees Don’t Trust It
