Introduction:

A. Definition of Multimodal AI (MMAI)

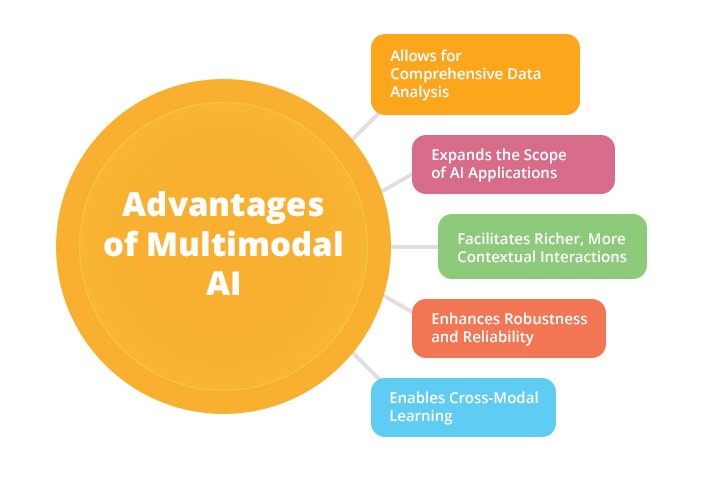

Multimodal AI (MMAI) is a cutting-edge field that aims to build machines that can understand and interact with the world using multiple modalities, such as text, images, audio, and video. Unlike traditional AI systems that focus on one modality at a time, MMAI systems can integrate and leverage information from different sources, creating a richer and more natural representation of reality. MMAI is inspired by how humans perceive and communicate with their environment, using a combination of senses and languages.

B. Why Multimodality Matters

Picture AI that doesn't just understand language but can also see, hear, and interpret its surroundings. Multimodal AI aims at exactly this: by combining signals, a system can resolve ambiguities that a single-modality model cannot. A transcript alone may not reveal sarcasm, for example, while the speaker's tone of voice often does.

C. Applications That Redefine Possibilities

MMAI can help doctors diagnose diseases by analyzing medical images and reports, or enable self-driving cars to perceive their surroundings and communicate with other vehicles and pedestrians. MMAI can also improve the quality and safety of social media platforms by detecting and filtering harmful or inappropriate content. MMAI is a key driver of innovation that can enhance human capabilities and experiences.

Modalities in Multimodal AI:

A. Text

Natural Language Processing (NLP):

Welcome to the world of NLP, where machines can not only process words but also grasp the subtleties, emotions, and complexities of human language. NLP is the field of computer science that aims to make machines communicate with humans in natural ways, using text or speech. NLP enables machines to perform tasks such as translation, summarization, sentiment analysis, question answering, and more.

Sentiment Analysis:

Sentiment analysis classifies the attitude expressed in a piece of text, for example as positive, negative, or neutral. In MMAI, this signal can be combined with cues from images or audio, bringing a human touch to digital communication.

Language Translation:

Machine translation converts text from one language to another, helping MMAI systems break language barriers and serve a global community.

B. Images

Computer Vision:

Imagine AI that not only sees images but comprehends them. Computer vision goes beyond raw pixels: it extracts the objects, scenes, and context that an image contains.

Object Detection:

Object detection locates the objects in an image and labels each one, typically by drawing a bounding box around it. It is a core building block for applications like autonomous vehicles and security systems.

Image Classification:

Image classification assigns a category label to an entire image, making visual information easier to search, organize, and interpret.

C. Audio

Speech Recognition:

Picture an AI that not only hears words but understands them. Speech recognition in MMAI transcribes spoken words, making voice interactions more natural.

Emotion Recognition:

Emotion recognition estimates a speaker's emotional state from audio cues such as pitch, pace, and loudness, opening avenues for more empathetic human-machine interactions.

Speaker Identification:

Speaker identification determines who is speaking from the characteristics of their voice. It supports authentication as well as personalized and secure experiences.

D. Video

Action Recognition:

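Action recognition identifies what people are doing in a video, such as walking, waving, or falling. Because an action unfolds over time, the model has to reason across frames rather than over a single image. This makes it a game-changer for surveillance and video analysis.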

Video Summarization:

Video summarization condenses a long video into its key moments, much like a movie trailer, for efficient analysis and sharing.

Scene Understanding:

Scene understanding interprets what a video scene contains and how its elements relate to one another, a crucial skill for applications like augmented reality and video surveillance.

Challenges in Multimodal AI:

A. Data Integration

Handling Heterogeneous Data:

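MMAI faces the challenge of blending data types that differ in format, scale, and granularity. Text arrives as discrete tokens, images as grids of pixels, and audio as a sampled waveform, so each must be converted into a comparable representation before the pieces of the jigsaw fit together.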

Alignment of Modalities:

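Modalities must be aligned so that the pieces describing the same thing are matched up, for example pairing a spoken word with the video frames in which it is uttered. Without this alignment, any interpretation or decision built on top is unreliable.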

B. Model Fusion

Combining Outputs from Different Modalities:

Outputs from different modalities can conflict with one another or be ambiguous on their own. Fusion is a balancing act in which MMAI reconciles them into a single decision.

Weighting Modalities:

Like a conductor balancing an orchestra, an MMAI system assigns each modality a weight that reflects how reliable or informative it is for the task. These weights can be set by hand or learned from data.
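To make modality weighting concrete, here is a minimal sketch of weighted late fusion. It assumes each modality already has its own classifier that outputs class probabilities. The function name `weighted_late_fusion`, the modality names, and all numbers are hypothetical and chosen only for illustration.

```python
def weighted_late_fusion(modality_probs, weights):
    """Blend per-modality class probabilities using normalized weights."""
    if set(modality_probs) != set(weights):
        raise ValueError("each modality needs exactly one weight")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    num_classes = len(next(iter(modality_probs.values())))
    fused = [0.0] * num_classes
    for name, probs in modality_probs.items():
        share = weights[name] / total  # normalize so the shares sum to 1
        for i, p in enumerate(probs):
            fused[i] += share * p
    return fused


# Hypothetical classifier outputs over the classes [negative, positive].
probs = {
    "text": [0.2, 0.8],
    "image": [0.6, 0.4],
    "audio": [0.5, 0.5],
}
# Assumed weights: text is trusted twice as much as the other modalities.
weights = {"text": 2.0, "image": 1.0, "audio": 1.0}

fused = weighted_late_fusion(probs, weights)
print(fused)
```

Because the weights are normalized, the fused values still form a probability distribution. In practice the weights might be tuned on validation data instead of fixed by hand.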

C. Cross-Modal Learning

Transfer Learning Across Modalities:

Knowledge learned in one modality can be transferred to another, for example reusing what a model learned from text to help it interpret images. Done well, this improves adaptability and generalization.

Shared Representations:

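Shared representations map inputs from different modalities into a common space, so that related content ends up close together regardless of its original form. This improves both efficiency and understanding across modalities.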

Techniques and Approaches:

A. Fusion Models

Early Fusion:

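In early fusion, MMAI combines the modalities at the input or feature level, before any modality-specific decision is made, and a single model learns from the joint representation. It's like mixing all the ingredients together before cooking.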

Late Fusion:

In late fusion, each modality is first processed independently by its own model, and only the resulting predictions are combined. Picture a chef preparing each component separately and bringing them together on the plate.

Hybrid Fusion:

Like a culinary fusion, hybrid fusion takes the best of both worlds: some information is merged at the feature level, as in early fusion, and some at the decision level, as in late fusion.
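The difference between early and late fusion is easiest to see side by side. The sketch below uses plain linear scoring as a stand-in for real models. The feature values, weights, and function names are all assumptions made for illustration.

```python
def linear_score(features, weights):
    """Toy stand-in for a model: a weighted sum of the input features."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    return sum(f * w for f, w in zip(features, weights))


def early_fusion(text_features, image_features):
    """Early fusion: concatenate features into one joint representation."""
    return list(text_features) + list(image_features)


def late_fusion(text_score, image_score):
    """Late fusion: average predictions made independently per modality."""
    return (text_score + image_score) / 2.0


# Hypothetical feature vectors for one sample.
text_features = [1.0, 0.0]
image_features = [0.5, 0.5, 1.0]

# Early fusion: one model scores the joint vector.
joint = early_fusion(text_features, image_features)
early_score = linear_score(joint, [0.4, -0.2, 0.1, 0.3, 0.2])

# Late fusion: one model per modality, combined only at the end.
text_score = linear_score(text_features, [0.6, -0.1])
image_score = linear_score(image_features, [0.2, 0.2, 0.4])
late_score = late_fusion(text_score, image_score)

print(early_score, late_score)
```

A hybrid design could, for instance, feed both the joint vector and the per-modality scores into a final model. That variant is left out here to keep the sketch short.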

B. Cross-Modal Retrieval

Similarity Learning:

Think of similarity learning as matchmaking: the model learns to score how well items from different modalities go together, for example a caption and an image. These scores are the basis of cross-modal retrieval.

Embedding Models:

Embedding models give different modalities a shared language. Each input is mapped to a vector, and the vectors of related inputs lie close together, so a text query can be compared directly with an image.
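Once text and images live in the same embedding space, retrieval reduces to a nearest-neighbor search. The sketch below ranks images against a text query by cosine similarity. The three-dimensional embeddings and file names are made up for illustration; a real system would obtain the vectors from trained encoders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_embedding, candidates, top_k=1):
    """Return the names of the top_k candidates most similar to the query."""
    ranked = sorted(
        candidates,
        key=lambda name: cosine_similarity(query_embedding, candidates[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical image embeddings in a shared text-image space.
image_embeddings = {
    "dog.jpg": [0.9, 0.1, 0.0],
    "car.jpg": [0.0, 0.2, 0.9],
    "beach.jpg": [0.1, 0.9, 0.2],
}
# Hypothetical embedding of the text query "a photo of a dog".
text_query = [0.8, 0.2, 0.1]

best = retrieve(text_query, image_embeddings, top_k=2)
print(best)
```

The same function works in the other direction: pass an image embedding as the query and a dictionary of caption embeddings as the candidates.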

C. Attention Mechanisms

Self-Attention:

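Think of self-attention as an attentive friend. It lets a model weigh the elements within a single modality against one another, such as the words in a sentence, so that it can focus on the ones that matter most at each position.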

Cross-Modal Attention:

Picture cross-modal attention as a skilled mediator. It lets elements of one modality attend to elements of another, for example letting each word of a question focus on the relevant regions of an image.
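Both kinds of attention can be sketched with the same scaled dot-product routine; the only difference is where the queries, keys, and values come from. The version below is deliberately simplified: it omits the learned projection matrices that real models apply, and the token and patch vectors are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention without learned projections."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values))
             for j in range(len(values[0]))]
        )
    return outputs


# Hypothetical feature vectors for two text tokens and three image patches.
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: queries, keys, and values all come from the text.
self_attended = attention(text_tokens, text_tokens, text_tokens)

# Cross-modal attention: text queries attend over image keys and values.
cross_attended = attention(text_tokens, image_patches, image_patches)
print(cross_attended)
```

Each text token receives its own mix of image patches, weighted by how strongly the token's vector matches each patch.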

D. Transfer Learning

Pre-training on Large Datasets:

MMAI is like a seasoned traveler: it first learns general patterns from vast datasets, then brings that knowledge to new tasks and modalities for enhanced performance.

Fine-tuning for Specific Tasks:

Fine-tuning adapts a pre-trained model to a specific task by continuing its training on a smaller, task-specific dataset, sharpening accuracy and efficiency where it matters.
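One common way to fine-tune is to keep the pre-trained encoder frozen and train only a small task-specific head on top of it. The sketch below illustrates that idea with a toy setup. The `frozen_encoder` function is a hypothetical stand-in for a pre-trained model, and the data, learning rate, and epoch count are arbitrary assumptions.

```python
import math


def frozen_encoder(x):
    """Hypothetical stand-in for a pre-trained encoder; never updated."""
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(examples, labels, epochs=200, lr=0.5):
    """Train a logistic-regression head on top of the frozen features."""
    features = [frozen_encoder(x) for x in examples]
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            pred = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = pred - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(x, w, b):
    """Probability of class 1 for a raw input."""
    f = frozen_encoder(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


# Tiny made-up task dataset: small inputs are class 0, large ones class 1.
examples = [[0.0, 0.0], [0.2, 0.1], [0.9, 1.0], [1.0, 0.8]]
labels = [0, 0, 1, 1]

w, b = fine_tune_head(examples, labels)
print(predict([1.0, 1.0], w, b), predict([0.0, 0.1], w, b))
```

Only `w` and `b` change during training, which keeps fine-tuning cheap. Updating the encoder as well is another option when more task data is available.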

Applications:

A. Healthcare

Medical Image Analysis:

MMAI can enhance medical diagnostics by analyzing scans alongside written reports, so that visual findings and clinical notes inform each other and support more precise conclusions.

Voice-Based Diagnostics:

Voice-based diagnostics aims to detect signs of illness in the way a patient speaks, turning the human voice into a diagnostic signal.

B. Autonomous Vehicles

Sensor Fusion:

Buckle up for a ride into the future: sensor fusion merges data from a vehicle's various sensors, such as cameras, radar, and lidar, into a single picture of its surroundings, enhancing perception and decision-making in autonomous vehicles.

Multimodal Perception:

Multimodal perception lets vehicles sense the world through several channels at once, so that a weakness in one channel can be covered by another, for safer and smarter navigation.

C. Human-Computer Interaction

Gesture Recognition:

Gesture recognition interprets hand and body movements as input, transforming the way humans interact with computers into a more intuitive experience.

Multimodal Interfaces:

Multimodal interfaces accept several kinds of input, such as voice, touch, and gesture, providing a natural and seamless connection between humans and machines.

D. Social Media and Content Analysis

Emotion Detection in Text and Images:

MMAI can read between the lines, interpreting emotions expressed in both text and images. Looking at the two together helps when one contradicts the other, as with a cheerful caption on a somber photo.

Content Moderation:

MMAI can act as a digital guardian, detecting and filtering inappropriate content across text, images, and video to create a safer online environment.

Future Trends:

A. Advancements in Modalities

Imagine a world where each modality evolves to its fullest potential. MMAI is at the forefront of this progress: advances in image recognition, natural language processing, and other single-modality fields feed directly into better combined systems.

B. Explainability and Interpretability

In the future, MMAI aims to be an open book. Efforts are underway to make its decisions more transparent and understandable, fostering trust between humans and machines.

C. Ethical Considerations

As MMAI becomes woven into the fabric of daily life, ethical considerations take center stage. The focus is on addressing bias, ensuring privacy, and maintaining security to create a fair and responsible AI ecosystem.

D. Integration with Generative Models

Picture a world where MMAI collaborates with generative models, creating content that’s not just informative but also vivid and contextual.

Conclusion:

A. Recap of Multimodal AI

In a nutshell, Multimodal AI is about experiencing the world in all its richness, combining information from various sources to create a more comprehensive understanding.

B. Potential Impact on Various Industries

Multimodal AI stands to reshape many industries, including healthcare, transportation, human-computer interaction, and social media.

C. Future Prospects and Challenges

The future of Multimodal AI is a thrilling journey filled with possibilities and challenges. From seamless integrations to ethical considerations, the path ahead promises to shape a smarter and interconnected world. Get ready to witness the evolution of intelligence as we know it!
